Introducing Hunyuan3D-1.0, a game-changer in the world of 3D asset creation. Imagine generating high-quality 3D models in under 10 seconds, with no more long waits or cumbersome processes. This innovative tool combines cutting-edge AI with a two-stage framework: it first generates realistic multi-view images and then transforms them into precise, high-fidelity 3D assets. Whether you're a game developer, product designer, or digital artist, Hunyuan3D-1.0 lets you speed up your workflow without compromising on quality. Explore how this technology can reshape your creative process and take your projects to the next level. The future of 3D asset generation is here, and it is faster, smarter, and more efficient than ever before.

Learning Objectives

- Learn how Hunyuan3D-1.0 simplifies 3D modeling by generating high-quality assets in under 10 seconds.

- Explore the two-stage approach of Hunyuan3D-1.0.

- Discover how advanced AI-driven processes such as adaptive guidance and super-resolution enhance both speed and quality in 3D modeling.

- Explore the diverse use cases of this technology, including gaming, e-commerce, healthcare, and more.

- Understand how Hunyuan3D-1.0 opens up 3D asset creation to a broader audience, making it faster, cost-effective, and scalable for businesses.

This article was published as a part of the Data Science Blogathon.

Features of Hunyuan3D-1.0

The uniqueness of Hunyuan3D-1.0 lies in its approach to creating 3D models, which combines advanced AI with a streamlined, two-stage process. Unlike traditional methods, which require hours of manual work and complex modeling software, this system automates the creation of high-quality 3D assets from a single image or text prompt in under 10 seconds. It does this by first generating multi-view 2D images of a product or object with a diffusion model. These images are then transformed into detailed, realistic 3D models with an impressive level of fidelity.

What makes this approach truly innovative is its ability to significantly reduce the time and skill required for 3D modeling, which is typically a labor-intensive and technical process. By packaging this into an easy-to-use system, it opens up 3D asset creation to a broader audience, including game developers, digital artists, and designers who may not have specialized expertise in 3D modeling. The system's capacity to generate models quickly, efficiently, and accurately not only accelerates the creative process but also allows businesses to scale their projects and reduce costs.

In addition, it doesn't just save time; it also delivers high-quality outputs. The AI-driven pipeline is designed so that each 3D model retains crucial visual and structural details, making the models well suited to real-time applications such as gaming or virtual simulations. This approach represents a leap forward in the integration of AI and 3D modeling, providing a solution that is fast, reliable, and accessible to a wide range of industries.

How Hunyuan3D-1.0 Works

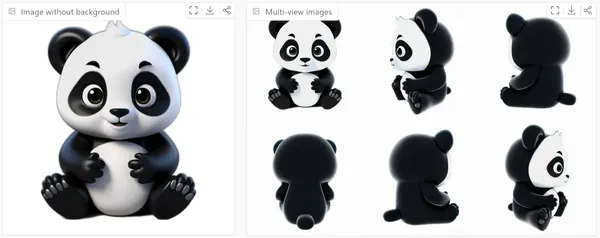

In this section, we discuss the two main stages of Hunyuan3D-1.0: a multi-view diffusion model for 2D-to-3D lifting and a sparse-view reconstruction model.

Let's break down these stages to understand how they work together to create high-quality 3D models from 2D images.

Multi-view Diffusion Model

This method builds on the success of diffusion models in generating 2D images and extends it to generating multiple views of the same 3D object.

- The multi-view images are generated simultaneously by arranging them in a grid.

- By scaling up the Zero-1-to-3++ model, this approach produces a model 3× larger.

- The model uses a technique called "reference attention," which guides the diffusion model to produce images whose textures match those of a reference image.

- This involves adding an extra condition image during the denoising process to keep the generated images consistent.

- The model renders the images at specific angles (an elevation of 0° and multiple azimuths) on a white background.
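To make the grid layout and the fixed camera angles concrete, here is a minimal NumPy sketch. It assumes 6 views at evenly spaced azimuths, tiled as a 2 × 3 grid, with cameras on a sphere in a y-up coordinate system; the view count, grid shape, camera radius, and the helper names `view_azimuths`, `camera_position`, and `tile_views` are all hypothetical illustrations, not Hunyuan3D-1.0's actual configuration or code.

```python
import numpy as np


def view_azimuths(num_views: int) -> list:
    """Evenly spaced azimuths in degrees; 0 is assumed to be the front view."""
    return [i * 360.0 / num_views for i in range(num_views)]


def camera_position(azimuth_deg: float, elevation_deg: float = 0.0, radius: float = 2.0) -> np.ndarray:
    """Camera centre on a sphere around the object (y-up axes, assumed convention)."""
    az, el = np.radians(azimuth_deg), np.radians(elevation_deg)
    return radius * np.array([np.cos(el) * np.sin(az), np.sin(el), np.cos(el) * np.cos(az)])


def tile_views(views: np.ndarray, rows: int, cols: int) -> np.ndarray:
    """Arrange N views of shape (H, W, C) row by row into one (rows*H, cols*W, C) image."""
    n, h, w, c = views.shape
    if n != rows * cols:
        raise ValueError("the grid must hold exactly one slot per view")
    return views.reshape(rows, cols, h, w, c).transpose(0, 2, 1, 3, 4).reshape(rows * h, cols * w, c)


# Hypothetical setup: 6 views at elevation 0 degrees, tiled as a 2 x 3 grid.
azimuths = view_azimuths(6)
cameras = [camera_position(a, elevation_deg=0.0) for a in azimuths]
# All-ones RGB images stand in for renders on a white background.
views = np.ones((6, 320, 320, 3), dtype=np.float32)
grid = tile_views(views, rows=2, cols=3)
print(azimuths)    # [0.0, 60.0, 120.0, 180.0, 240.0, 300.0]
print(grid.shape)  # (640, 960, 3)
```

Packing all views into one image is what lets a single diffusion pass denoise every view at once, so the views can attend to each other and stay consistent.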

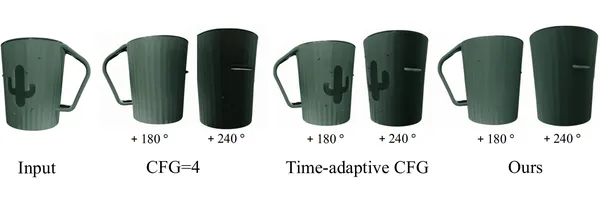

Adaptive Classifier-free Guidance (CFG)

- In multi-view generation, a small CFG scale enhances texture detail but introduces unacceptable artifacts, while a large CFG scale improves object geometry at the cost of texture quality.

- The effect of a given CFG scale also varies by view: higher scales preserve more detail in front views but can make back views darker.

- The model therefore uses an adaptive CFG that adjusts the CFG scale for different views and time steps.

- Intuitively, a higher CFG scale is set for front views and at early denoising time steps; it is then decreased as denoising progresses and as the view of the generated image diverges from the condition image.

- This dynamic adjustment improves both the texture quality and the geometry of the generated models.

- The result is more balanced, high-quality multi-view generation.
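The scheduling idea above can be sketched in a few lines of NumPy. The CFG combination in `apply_cfg` is the standard classifier-free guidance formula; the schedule in `adaptive_cfg_scale` is a hypothetical linear one chosen only to show the stated behavior (highest for the front view at early steps, lower for views further from the condition image and as denoising proceeds). The values `s_min=2.0` and `s_max=8.0` and the linear form are assumptions, not the paper's actual schedule.

```python
import numpy as np


def adaptive_cfg_scale(azimuth_deg, t, num_steps, s_min=2.0, s_max=8.0):
    """Hypothetical schedule: s_max for the front view at the first denoising step,
    falling linearly toward s_min as t -> 0 and as the view turns away from the front."""
    # Angular distance from the condition (front) view, in [0, 180] degrees.
    dist = np.abs((np.asarray(azimuth_deg, dtype=float) + 180.0) % 360.0 - 180.0)
    view_factor = 1.0 - dist / 180.0   # 1 at the front view, 0 directly behind it
    time_factor = t / num_steps        # t counts down from num_steps (noisy) to 0 (clean)
    return s_min + (s_max - s_min) * view_factor * time_factor


def apply_cfg(eps_uncond, eps_cond, scale):
    """Standard classifier-free guidance: extrapolate from the unconditional prediction."""
    return eps_uncond + scale * (eps_cond - eps_uncond)


# Usage with per-view scales broadcast over dummy noise predictions of shape (views, features).
azimuths = np.array([0.0, 60.0, 120.0, 180.0, 240.0, 300.0])
rng = np.random.default_rng(0)
eps_uncond, eps_cond = rng.standard_normal((2, 6, 16))
for t in (50, 25, 1):
    scales = adaptive_cfg_scale(azimuths, t=t, num_steps=50)
    eps = apply_cfg(eps_uncond, eps_cond, scales[:, None])
    print(t, np.round(scales, 2))
```

Because each view gets its own scale, the front view can be pushed toward the condition image while views behind it keep more of the unconditional prediction.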

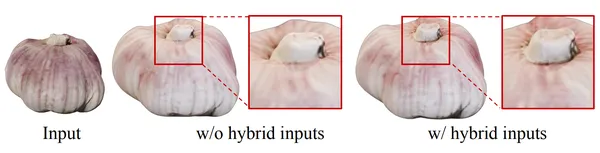

Sparse-view Reconstruction Model

This model turns the generated multi-view images into detailed 3D reconstructions using a transformer-based approach. Its priorities are speed and quality: the reconstruction takes less than 2 seconds.

Hybrid Inputs

- The reconstruction model uses both calibrated and uncalibrated (user-provided) images for accurate 3D reconstruction.

- Calibrated images guide the model's understanding of the object's structure, while uncalibrated images fill in gaps, especially for views that are hard to capture from standard camera angles (such as top or bottom views).

Super-resolution

- One challenge in 3D reconstruction is that low-resolution intermediate representations often result in poor-quality models.

- To address this, the model uses a "super-resolution module."

- This module increases the resolution of the triplanes (three axis-aligned 2D feature planes), improving detail in the final 3D model.

- By avoiding expensive self-attention on high-resolution data, the model stays efficient while producing sharper details.
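The sketch below shows what a triplane is and why attention at high resolution is costly. A triplane stores features on three axis-aligned planes (XY, XZ, YZ); a 3D point is projected onto each plane, and the three looked-up features are combined (summed here). `upsample_triplane` uses fixed nearest-neighbour repetition purely as a placeholder for the learned super-resolution module. The 8 channels, the 64 → 256 resolutions, the nearest-pixel lookup (real systems usually interpolate bilinearly), and all function names are illustrative assumptions.

```python
import numpy as np


def upsample_triplane(planes: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a (3, C, R, R) triplane to (3, C, R*factor, R*factor).
    Placeholder only: the real super-resolution module is learned, not a fixed repeat."""
    return planes.repeat(factor, axis=2).repeat(factor, axis=3)


def query_triplane(planes: np.ndarray, point) -> np.ndarray:
    """Feature of a 3D point in [-1, 1]^3: project it onto the XY, XZ and YZ planes,
    look up each plane (nearest pixel, for brevity) and sum the three feature vectors."""
    res = planes.shape[-1]

    def to_index(u: float) -> int:
        # Map a coordinate in [-1, 1] to the nearest pixel index in [0, res - 1].
        return int(round((u + 1.0) / 2.0 * (res - 1)))

    x, y, z = (to_index(c) for c in point)
    return planes[0][:, y, x] + planes[1][:, z, x] + planes[2][:, z, y]


rng = np.random.default_rng(0)
low = rng.standard_normal((3, 8, 64, 64)).astype(np.float32)  # hypothetical: 8 channels at 64x64
high = upsample_triplane(low, factor=4)                        # (3, 8, 256, 256)
feature = query_triplane(high, (0.1, -0.3, 0.5))               # one 8-dimensional feature vector

# Why full self-attention is avoided at high resolution: one token per triplane pixel.
tokens_low, tokens_high = 3 * 64**2, 3 * 256**2
print(tokens_low, tokens_high, (tokens_high / tokens_low) ** 2)  # 12288 196608 256.0
```

Going from 64 to 256 per side multiplies the token count by 16, and since self-attention cost grows with the square of the token count, that is roughly 256 times more attention work. This is the cost a separate upsampling module avoids.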

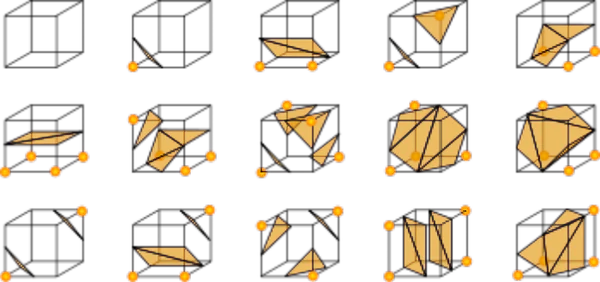

3D Representation

- Instead of relying solely on implicit 3D representations (e.g., NeRF or Gaussian Splatting), this model uses a combination of implicit and explicit representations.

- It uses NeuS, which models the shape as a Signed Distance Function (SDF), and then converts that SDF into an explicit mesh with the Marching Cubes algorithm.

- These meshes are used directly for texture mapping, preparing the final 3D outputs for artistic refinement and real-world applications.
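To illustrate the SDF-to-mesh step, here is a small NumPy sketch. In NeuS the SDF is a learned network; an analytic sphere SDF stands in for it here. The function `edge_zero_crossings` implements only the vertex-placement step of Marching Cubes: find grid edges whose endpoints have opposite SDF signs and linearly interpolate where the surface crosses. It does not build triangles from the Marching Cubes case table. For complete meshes, a library routine such as scikit-image's `skimage.measure.marching_cubes` is the usual choice. The grid size, radius, and function names are illustrative assumptions.

```python
import numpy as np


def sphere_sdf(points: np.ndarray, radius: float = 0.5) -> np.ndarray:
    """Analytic signed distance to a sphere: negative inside, positive outside."""
    return np.linalg.norm(points, axis=-1) - radius


def edge_zero_crossings(sdf: np.ndarray, grid: np.ndarray) -> np.ndarray:
    """Vertex-placement step of Marching Cubes: on every grid edge whose endpoints have
    opposite SDF signs, place a vertex where the linearly interpolated SDF is zero."""
    vertices = []
    for axis in range(3):
        lo = [slice(None)] * 3
        hi = [slice(None)] * 3
        lo[axis], hi[axis] = slice(0, -1), slice(1, None)
        s0, s1 = sdf[tuple(lo)], sdf[tuple(hi)]
        p0, p1 = grid[tuple(lo)], grid[tuple(hi)]
        crossing = (s0 < 0) != (s1 < 0)                   # surface passes through this edge
        t = s0[crossing] / (s0[crossing] - s1[crossing])  # interpolation weight in [0, 1]
        vertices.append(p0[crossing] + t[:, None] * (p1[crossing] - p0[crossing]))
    return np.concatenate(vertices)


n = 32
coords = np.linspace(-1.0, 1.0, n)
grid = np.stack(np.meshgrid(coords, coords, coords, indexing="ij"), axis=-1)  # (n, n, n, 3)
sdf = sphere_sdf(grid)
verts = edge_zero_crossings(sdf, grid)
radii = np.linalg.norm(verts, axis=1)
print(len(verts), radii.min(), radii.max())  # radii cluster tightly around 0.5
```

The SDF's sign tells you which side of the surface each grid point lies on, which is why it can be converted deterministically into an explicit mesh that texture-mapping tools can consume.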

Getting Started with Hunyuan3D-1.0

Clone the repository.

git clone https://github.com/tencent/Hunyuan3D-1

cd Hunyuan3D-1

Installation Guide for Linux

The ‘env_install.sh’ script is used to set up the environment.

# step 1, create conda env

conda create -n hunyuan3d-1 python=3.9  # or 3.10, 3.11, 3.12

conda activate hunyuan3d-1

# step 2. install torch-related packages

which pip  # check that pip corresponds to the active python

# modify the CUDA version according to your machine (recommended)

pip install torch torchvision --index-url https://download.pytorch.org/whl/cu121

# step 3. install other packages

bash env_install.sh

Optionally, ‘xformers’ or ‘flash_attn’ can be installed to accelerate computation.

pip install xformers --index-url https://download.pytorch.org/whl/cu121

pip install flash_attn

Most environment errors are caused by a mismatch between the machine and the packages. The versions can be specified manually, as in the following known-good case:

# python3.9

pip install torch==2.0.1 torchvision==0.15.2 --index-url https://download.pytorch.org/whl/cu118

When installing pytorch3d, the gcc version should preferably be newer than 9, and the GPU driver should not be too old.
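Because most setup failures come from version mismatches, a quick pre-flight check can save time. The following is a minimal sketch using only the standard library (plus PyTorch if it is already installed). It checks the Python versions listed for the conda environment, the gcc major version against the "newer than 9" guideline, and whether PyTorch sees a CUDA device. The script and its function names are hypothetical helpers, not part of the Hunyuan3D-1 repository.

```python
import importlib.util
import re
import shutil
import subprocess
import sys


def python_version_ok(version=None) -> bool:
    """True if the interpreter is one of the versions listed for the conda env (3.9 to 3.12)."""
    major_minor = tuple((version or sys.version_info)[:2])
    return (3, 9) <= major_minor <= (3, 12)


def gcc_major_version():
    """Major version of the gcc on PATH, or None if gcc is missing or unparsable."""
    gcc = shutil.which("gcc")
    if gcc is None:
        return None
    out = subprocess.run([gcc, "-dumpversion"], capture_output=True, text=True).stdout.strip()
    match = re.match(r"(\d+)", out)
    return int(match.group(1)) if match else None


def torch_cuda_status() -> str:
    """Describe the installed PyTorch build without failing if it is absent or broken."""
    if importlib.util.find_spec("torch") is None:
        return "torch: not installed"
    try:
        import torch
    except Exception as exc:  # e.g. a CUDA library mismatch surfaces at import time
        return f"torch: import failed ({exc})"
    if not torch.cuda.is_available():
        return f"torch {torch.__version__}: CUDA not available"
    return f"torch {torch.__version__}: CUDA {torch.version.cuda}, {torch.cuda.get_device_name(0)}"


if __name__ == "__main__":
    print("python", sys.version.split()[0], "ok" if python_version_ok() else "outside 3.9-3.12")
    gcc = gcc_major_version()
    print("gcc", gcc, "ok" if gcc is not None and gcc > 9 else "check (newer than 9 preferred)")
    print(torch_cuda_status())
```

Run it inside the activated ‘hunyuan3d-1’ environment before ‘bash env_install.sh’, and again afterwards to confirm that the CUDA build of PyTorch matches your driver.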

Download Pretrained Models

The models are available at https://huggingface.co/tencent/Hunyuan3D-1:

- Hunyuan3D-1/lite: lite model for multi-view generation.

- Hunyuan3D-1/std: standard model for multi-view generation.

- Hunyuan3D-1/svrm: sparse-view reconstruction model.

To download the models, first install ‘huggingface-cli’ (the Hugging Face Hub documentation has detailed instructions).

python3 -m pip install "huggingface_hub[cli]"

Then download the models using the following commands:

mkdir weights

huggingface-cli download tencent/Hunyuan3D-1 --local-dir ./weights

mkdir weights/hunyuanDiT

huggingface-cli download Tencent-Hunyuan/HunyuanDiT-v1.1-Diffusers-Distilled --local-dir ./weights/hunyuanDiT

Inference

For text-to-3D generation, both Chinese and English prompts are supported:

python3 main.py \
    --text_prompt "a lovely rabbit" \
    --save_folder ./outputs/test/ \
    --max_faces_num 90000 \
    --do_texture_mapping \
    --do_render

For image-to-3D generation:

python3 main.py \
    --image_prompt "/path/to/your/image" \
    --save_folder ./outputs/test/ \
    --max_faces_num 90000 \
    --do_texture_mapping \
    --do_render

Using Gradio

The two versions of the multi-view generation model, std and lite, can be run as follows:

# std

python3 app.py

python3 app.py --save_memory

# lite

python3 app.py --use_lite

python3 app.py --use_lite --save_memory

The demo can then be accessed at http://0.0.0.0:8080. Note that 0.0.0.0 here should be replaced with your server's IP address (X.X.X.X).
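The Gradio demo is convenient for single assets. To generate several assets without clicking through the UI, the command-line entry point ‘main.py’ can be scripted instead. The sketch below only assembles the flags used in this article's text-to-3D and image-to-3D commands (‘--text_prompt’, ‘--image_prompt’, ‘--save_folder’, ‘--max_faces_num’, ‘--do_texture_mapping’, ‘--do_render’). The helper names, the one-folder-per-prompt layout, and the dry-run default are hypothetical choices, and other flag combinations have not been verified against the repository.

```python
import shlex
import subprocess
from pathlib import Path


def build_main_command(save_folder, text_prompt=None, image_prompt=None,
                       max_faces_num=90000, texture=True, render=True):
    """Assemble a main.py invocation from the flags used in this article's examples."""
    if (text_prompt is None) == (image_prompt is None):
        raise ValueError("pass exactly one of text_prompt or image_prompt")
    cmd = ["python3", "main.py"]
    if text_prompt is not None:
        cmd += ["--text_prompt", text_prompt]
    else:
        cmd += ["--image_prompt", str(image_prompt)]
    cmd += ["--save_folder", str(save_folder), "--max_faces_num", str(max_faces_num)]
    if texture:
        cmd.append("--do_texture_mapping")
    if render:
        cmd.append("--do_render")
    return cmd


def run_batch(prompts, out_root="./outputs", dry_run=True):
    """Run one text-to-3D job per prompt, each in its own (hypothetical) output folder."""
    for i, prompt in enumerate(prompts):
        cmd = build_main_command(Path(out_root) / f"prompt_{i:02d}", text_prompt=prompt)
        print(shlex.join(cmd))
        if not dry_run:
            # Must be launched from the repository root so that main.py is found.
            subprocess.run(cmd, check=True)


run_batch(["a lovely rabbit", "a grand piano"])  # dry run: only prints the commands
```

Passing the arguments as a list (rather than one shell string) keeps prompts with spaces or quotes intact. Set ‘dry_run=False’ once the printed commands look right.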

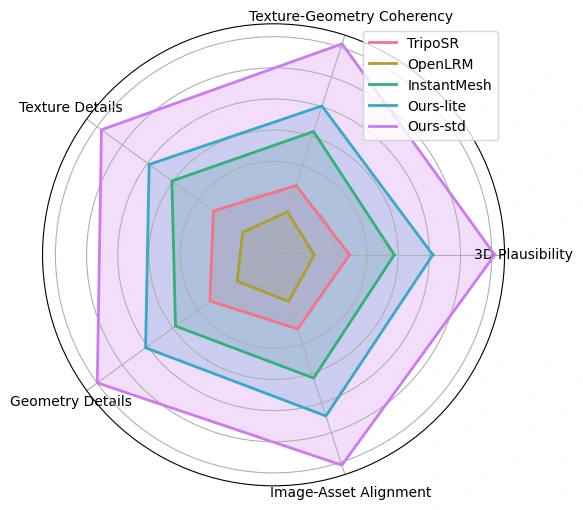

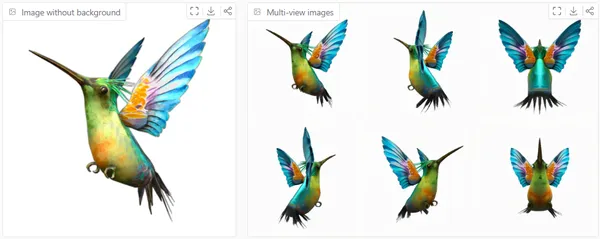

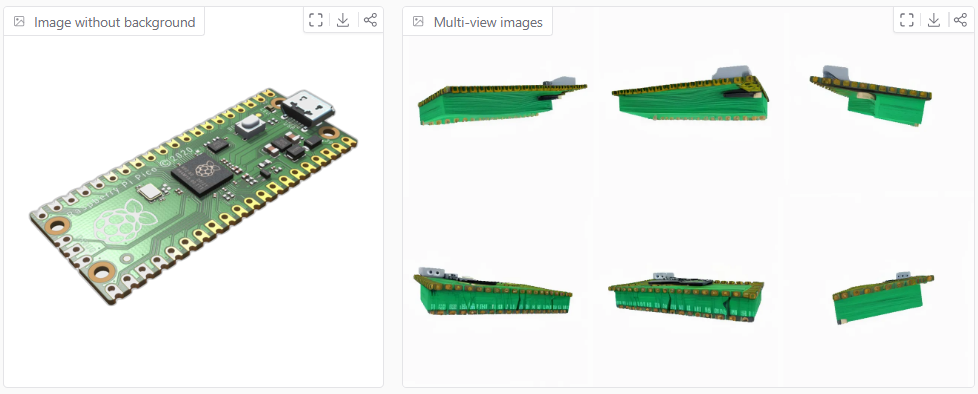

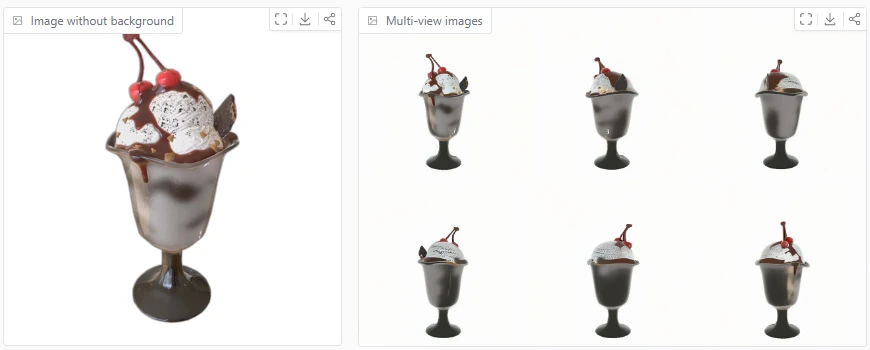

Examples of Generated Models

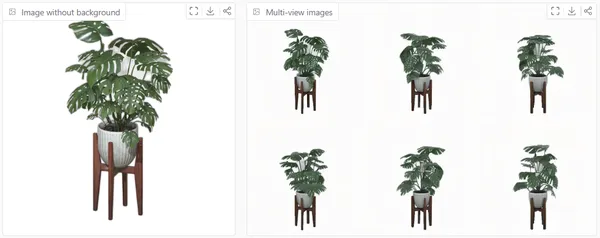

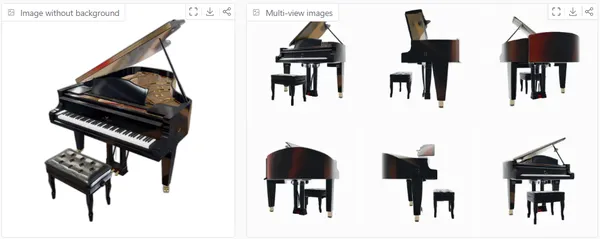

Generated using the Hugging Face Space: https://huggingface.co/spaces/tencent/Hunyuan3D-1

Example 1: Hummingbird

Example 2: Raspberry Pi Pico

Example 3: Sundae

Example 4: Monstera deliciosa

Example 5: Grand Piano

Pros and Challenges of Hunyuan3D-1.0

Pros

- High-quality 3D Outputs: Generates detailed and accurate 3D models from minimal inputs.

- Speed: Delivers reconstructions in seconds rather than hours.

- Versatility: Handles both calibrated and uncalibrated inputs, which suits diverse applications.

Challenges

- Sparse-view Limitations: Struggles with uncertainty in the top and bottom views because of the limited number of input views.

- Complexity in Resolution Scaling: Increasing triplane resolution adds computational cost despite optimizations.

- Dependence on Large Datasets: Requires extensive data and training resources for high-quality outputs.

Real-World Applications

- Game Development: Create detailed 3D assets for immersive gaming environments.

- E-Commerce: Generate realistic 3D product previews for online shopping.

- Virtual Reality: Build accurate 3D scenes for VR experiences.

- Healthcare: Visualize 3D anatomical models for medical training and diagnostics.

- Architectural Design: Render lifelike 3D layouts for planning and presentations.

- Film and Animation: Generate hyper-realistic visuals and CGI for movies and animated productions.

- Personalized Avatars: Create custom, lifelike avatars for social media, virtual meetings, or the metaverse.

- Industrial Prototyping: Streamline product design and testing with accurate 3D prototypes.

- Education and Training: Provide immersive 3D learning experiences in subjects such as biology, engineering, or geography.

- Virtual Home Tours: Enhance real estate listings with interactive 3D property walkthroughs for potential buyers.

Conclusion

Hunyuan3D-1.0 represents a significant leap forward in 3D reconstruction, offering a fast, efficient, and highly accurate way to generate detailed 3D models from sparse inputs. By combining multi-view diffusion, adaptive guidance, and sparse-view reconstruction, this approach pushes the boundaries of what is possible in near-real-time 3D generation. Its ability to integrate both calibrated and uncalibrated images, together with super-resolution and explicit 3D representations, opens up exciting possibilities for a wide range of applications, from gaming and design to virtual reality. By balancing geometric accuracy and texture detail, Hunyuan3D-1.0 stands to transform industries that rely on 3D modeling and to improve user experiences across many domains.

Moreover, it allows for continuous improvement and customization, adapting to new trends in design and to user needs. This flexibility helps keep it at the forefront of 3D modeling technology, offering businesses a competitive edge in an ever-evolving digital landscape. It is more than just a tool; it is a catalyst for innovation.

Key Takeaways

- Hunyuan3D-1.0 generates 3D models in under 10 seconds using multi-view images and sparse-view reconstruction, making it well suited to practical applications.

- The adaptive CFG scale improves both the geometry and the texture of generated 3D models, producing high-quality results across different views.

- Combining calibrated and uncalibrated inputs with a super-resolution module yields more accurate and detailed 3D shapes, addressing challenges faced by earlier methods.

- By converting implicit shapes into explicit meshes, the model delivers 3D models that are ready for real-world use and open to further artistic refinement.

- The two-stage approach of Hunyuan3D-1.0 makes complex 3D model creation not only faster but also more accessible, making it a powerful tool for industries that rely on high-quality 3D assets.


Frequently Asked Questions

Q1. Can Hunyuan3D-1.0 completely eliminate human intervention in 3D model creation?

A. No, it cannot completely eliminate human intervention. However, it can significantly improve the development workflow by drastically reducing the time required to generate 3D models and providing nearly complete outputs. Users may still need to make final refinements or adjustments to ensure the models meet specific requirements, but the process is much faster and more efficient than traditional methods.

Q2. Do I need specialized 3D modeling skills to use Hunyuan3D-1.0?

A. No. Hunyuan3D-1.0 simplifies the 3D modeling process, making it accessible even to people without specialized 3D design skills. The system automates the creation of 3D models from minimal input, allowing anyone to generate high-quality assets quickly.

Q3. How long does Hunyuan3D-1.0 take to generate a 3D model?

A. The lite model generates a 3D mesh from a single image in about 10 seconds on an NVIDIA A100 GPU, while the standard model takes about 25 seconds. These times exclude UV map unwrapping and texture baking, which add about 15 seconds.
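For capacity planning, the timings above can be turned into a rough per-asset and per-batch estimate. This sketch simply adds the quoted figures and assumes sequential generation on a single A100, ignoring model loading and rendering time. The helper names are hypothetical.

```python
# Per-asset timings quoted in the answer above (NVIDIA A100), in seconds.
MESH_SECONDS = {"lite": 10, "std": 25}
TEXTURE_SECONDS = 15  # UV unwrapping + texture baking


def end_to_end_seconds(model: str, with_texture: bool = True) -> int:
    """Approximate seconds for one asset: mesh generation plus optional texturing."""
    return MESH_SECONDS[model] + (TEXTURE_SECONDS if with_texture else 0)


def batch_minutes(model: str, num_assets: int, with_texture: bool = True) -> float:
    """Rough wall-clock minutes for sequential generation on a single GPU."""
    return num_assets * end_to_end_seconds(model, with_texture) / 60


for model in ("lite", "std"):
    print(model, end_to_end_seconds(model), "s per asset,",
          round(batch_minutes(model, 100), 1), "min per 100 assets")
```

With these figures, a textured asset takes about 25 seconds with the lite model or 40 seconds with the standard model, so a batch of 100 assets takes roughly 42 or 67 minutes.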

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.