You might need interacted with ChatGPT indirectly. Whether or not you have got requested for assist in educating a selected idea or an in depth guided step to resolve a fancy drawback.

In between, you need to present a “immediate”(quick or lengthy) to speak with the LLM to provide the specified response. Nonetheless, the true essence of those fashions isn’t just of their structure, however in how intelligently we talk with them.

That is the place immediate engineering strategies begin to occur. Proceed studying this weblog to find out about what immediate engineering is, its strategies, key elements, and a hands-on sensible information on constructing an LLM utilizing immediate engineering.

What’s Immediate Engineering?

To know immediate engineering, let’s break down the time period. The “immediate” refers to a textual content or sentence that LLM intakes as NLP and generate output. The response may very well be recursive, iterative, or incomplete.

Subsequently, immediate engineering comes into the image. It refers to crafting and optimising prompts to generate an iterative response. These responses fulfill the issue or generate output primarily based on the target desired, therefore controllable output technology.

With immediate engineering, you’re pushing an LLM right into a definitive course with an improved immediate to generate an efficient response.

Let’s perceive with an instance.

Immediate Engineering Instance

Think about your self as a tech information author. Your duties embody researching, crafting, and optimizing tech articles with a deal with rating in search engines like google and yahoo.

So, what’s a primary immediate you’ll give to an LLM? It may very well be like this:

“Draft an Search engine marketing-focused weblog submit on this “title” together with a couple of FAQs.“

It might generate a weblog submit on the given title with FAQs, however they lack factual, reader’s intent, and content material depth.

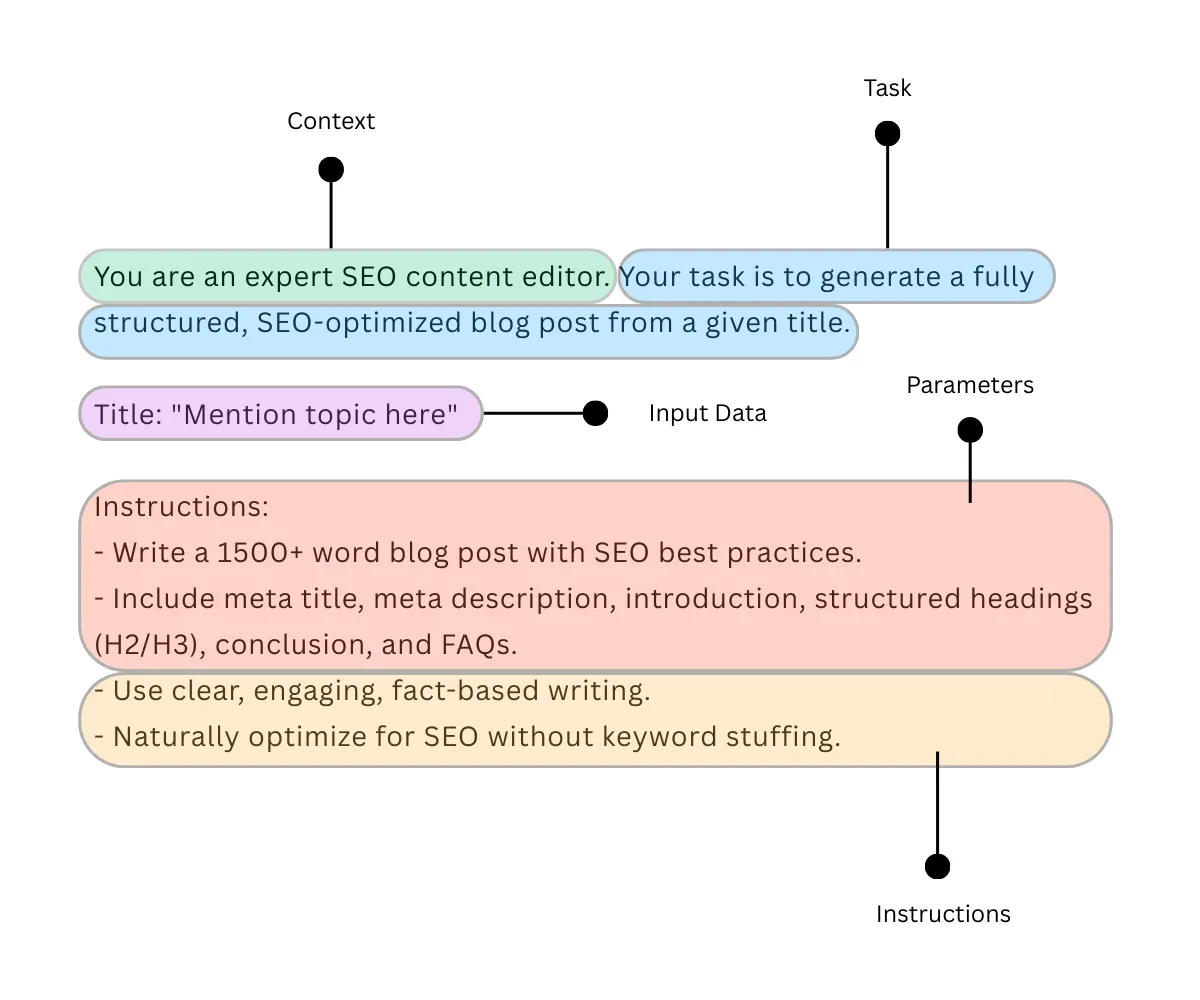

With immediate engineering, you may deal with this example successfully. Beneath is an instance of a immediate engineering script:

Immediate: “You’re an professional Search engine marketing content material editor. Your process is to generate a totally structured, Search engine marketing-optimized weblog submit from a given title.

Title: “Point out matter right here”

Directions:

– Write a 1500+ phrase weblog submit with Search engine marketing greatest practices.

– Embody meta title, meta description, introduction, structured headings (H2/H3), conclusion, and FAQs.

– Use clear, partaking, fact-based writing.

– Naturally optimize for Search engine marketing with out key phrase stuffing.“

The distinction between these two prompts is the iterative response. The primary immediate could fail to generate an in-depth article, key phrase optimisation, structured readability content material, and so forth., whereas the second immediate intelligently fulfils all of the targets.

Elements Of Immediate Engineering

You might need noticed essential issues earlier. When optimising for immediate, we outline the duty, give directions, add context, and parameters to offer an LLM a directive method for output technology.

Crucial elements of immediate engineering are as follows:

- Process: In a press release kind {that a} consumer particularly defines.

- Instruction: Present vital info to finish a process in a significant method.

- Context: Including an additional layer of knowledge to acknowledge by LLM to generate a extra related response.

- Parameters: Imposing guidelines, codecs, or constraints for the response.

- Enter Information: Present the textual content, picture, or different class of knowledge to course of.

The output generated by an LLM from a immediate engineering script can additional be optimised by way of numerous strategies. There are two classifications of immediate engineering strategies: primary and superior.

For now, we’ll focus on solely primary immediate engineering strategies for inexperienced persons.

Immediate Engineering Strategies For Learners

I’ve defined seven immediate engineering strategies in a tabular construction with examples.

| Strategies | Rationalization | Immediate Instance |

|---|---|---|

| Zero-Shot Prompting | Producing output by LLM with none examples given. | Translate the next from English to Hindi. “Tomorrow’s match can be superb.” |

| Few-Shot Prompting | Producing output by an LLM by studying from a couple of units of instance ingestion. | Translate the next from English to Hindi. “Tomorrow’s match can be superb.” For instance: Hi there → नमस्ते All good → सब अच्छा Nice Recommendation → बढ़िया सलाह |

| One-Shot Prompting | Producing output by an LLM studying from a one-example reference. | Translate the next from English to Hindi.“Tomorrow’s match can be superb.” For instance: Hi there → नमस्ते |

| Chain-of-thought (CoT) Prompting | Directing LLM to interrupt down reasoning into steps to enhance advanced process efficiency. | Clear up: 12 + 3 * (4 — 2). First, calculate 4 — 2. Then, multiply the end result by 3. Lastly, add 12. |

| Tree-of-thought (ToT) Prompting | Structuring the mannequin’s thought course of as a tree to know the processing conduct. | Think about three economists attempting to reply the query: What would be the worth of gasoline tomorrow? Every economist writes down one step of their reasoning at a time, then proceeds to the following. If at any stage one realizes their reasoning is flawed, they exit the method. |

| Meta Prompting | Guiding a mannequin to create a immediate to execute totally different duties. | Write a immediate that helps generate a abstract of any information article. |

| Reflexion | Prompting to instruct the mannequin to have a look at previous responses and enhance responses sooner or later. | Mirror on the errors made within the earlier clarification and enhance the following one. |

Now that you’ve got discovered immediate engineering strategies, let’s apply constructing an LLM utility.

Constructing LLM Purposes Utilizing Immediate Engineering

I’ve demonstrated find out how to construct a customized LLM utility utilizing immediate engineering. There are numerous methods to perform this. However I stored the method easy and beginner-friendly.

Conditions:

- An working system with a minimal of 8GB VRAM

- Obtain Python 3.13 in your system

- Obtain and set up Ollama

Goal: Creating “Search engine marketing Weblog Generator LLM” the place the mannequin takes a title and produces an Search engine marketing-optimized weblog draft.

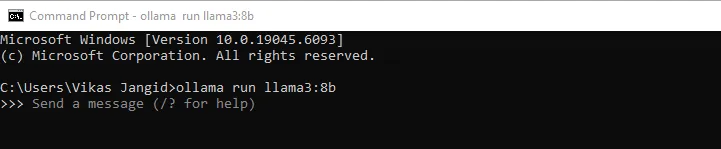

Step 1 – Putting in The Llama 3:8B Mannequin

After confirming that you’ve got happy the stipulations, head to the command line interface and set up the Llama3 8b mannequin, as that is our foundational mannequin for communication.

ollama run llama3:8b

The dimensions of the LLM is roughly 4.3 Gigabytes, so it would take a couple of minutes to obtain. You’d see successful message after obtain completion.

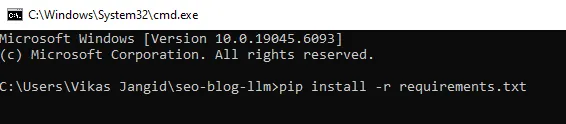

Step 2 – Getting ready Our Undertaking Information

We would require a mix of recordsdata for speaking with the LLM. It features a Python script and some necessities recordsdata.

Create a folder and title it “seo-blog-llm” and create a necessities.txt file with the next and put it aside.

ollama>=0.3.0

python-slugify>=8.0.4Now, head to the command line interface and on the mission supply path, run the next command.

pip set up -r necessities.txt

Step 3 – Creating Immediate File

In elegant editor or any code-based editor, save the next code logic with the file title prompts.py. This logic guides the LLM in find out how to reply and produce output. That is the place immediate engineering shines.

SYSTEM_PROMPT = """You're an professional Search engine marketing content material editor. You write fact-aware, reader-first articles that rank.

Observe these guidelines strictly:

- Output ONLY Markdown for the ultimate article; no explanations or preambles.

- Embody on the high a YAML entrance matter block with: meta_title, meta_description, slug, primary_keyword, secondary_keywords, word_count_target.

- Preserve meta_title ≤ 60 chars; meta_description ≤ 160 chars.

- Use H2/H3 construction, quick paragraphs, bullets, and numbered lists the place helpful.

- Preserve key phrase utilization pure (no stuffing).

- Finish with a conclusion and a 4–6 query FAQ.

- In case you insert any statistic or declare, mark it with [citation needed] (because you’re offline).

"""

USER_TEMPLATE = """Title: "{title}"

Write a {word_count}-word Search engine marketing weblog for the above title.

Constraints:

- Target market: {viewers}

- Tone: easy, informative, partaking (as if explaining to a 20-year-old)

- Geography: {geo}

- Main key phrase: {primary_kw}

- 5–8 secondary key phrases: {secondary_kws}

Format:

1) YAML entrance matter with: meta_title, meta_description, slug, primary_keyword, secondary_keywords, word_count_target

2) Intro (50–120 phrases)

3) Physique with clear H2/H3s together with the first key phrase naturally in a minimum of one H2

4) Sensible suggestions, checklists, and examples

5) Conclusion

6) FAQ (4–6 Q&As)

Guidelines:

- Don't embody “Define” or “Draft” sections.

- Don't present your reasoning or chain-of-thought.

- Preserve meta fields inside limits. If wanted, shorten.

"""Step 4 – Setting Up Python Script

That is our grasp file, which acts as a mini utility for speaking with the LLM. In elegant editor or any code-based editor, save the next code logic with the file title generator.py.

import re

import os

from datetime import datetime

from slugify import slugify

import ollama # pip set up ollama

from prompts import SYSTEM_PROMPT, USER_TEMPLATE

MODEL_NAME = "llama3:8b" # alter for those who pulled a unique tag

OUT_DIR = "output"

os.makedirs(OUT_DIR, exist_ok=True)

def build_user_prompt(

title: str,

word_count: int = 1500,

viewers: str = "newbie bloggers and content material entrepreneurs",

geo: str = "world",

primary_kw: str = None,

secondary_kws: record[str] = None,

):

if primary_kw is None:

primary_kw = title.decrease()

if secondary_kws is None:

secondary_kws = []

secondary_str = ", ".be a part of(secondary_kws) if secondary_kws else "n/a"

return USER_TEMPLATE.format(

title=title,

word_count=word_count,

viewers=viewers,

geo=geo,

primary_kw=primary_kw,

secondary_kws=secondary_str

)

def call_llm(system_prompt: str, user_prompt: str, temperature=0.4, num_ctx=8192):

# Chat-style name for higher instruction-following

resp = ollama.chat(

mannequin=MODEL_NAME,

messages=[

{"role": "system", "content": system_prompt},

{"role": "user", "content": user_prompt},

],

choices={

"temperature": temperature,

"num_ctx": num_ctx,

"top_p": 0.9,

"repeat_penalty": 1.1,

},

stream=False,

)

return resp["message"]["content"]

def validate_front_matter(md: str):

"""

Primary YAML entrance matter extraction and checks for meta size.

"""

fm = re.search(r"^---s*(.*?)s*---", md, re.DOTALL | re.MULTILINE)

points = []

meta = {}

if not fm:

points.append("Lacking YAML entrance matter block ('---').")

return meta, points

block = fm.group(1)

# naive parse (maintain easy for no dependencies)

for line in block.splitlines():

if ":" in line:

ok, v = line.cut up(":", 1)

meta[k.strip()] = v.strip().strip('"').strip("'")

# checks

mt = meta.get("meta_title", "")

mdsc = meta.get("meta_description", "")

if len(mt) > 60:

points.append(f"meta_title too lengthy ({len(mt)} chars).")

if len(mdsc) > 160:

points.append(f"meta_description too lengthy ({len(mdsc)} chars).")

if "slug" not in meta or not meta["slug"]:

# fall again to title-based slug if wanted

title_match = re.search(r'Title:s*"([^"]+)"', md)

fallback = slugify(title_match.group(1)) if title_match else f"post-{datetime.now().strftime('%YpercentmpercentdpercentHpercentM')}"

meta["slug"] = fallback

points.append("Lacking slug; auto-generated.")

return meta, points

def ensure_headers(md: str):

if "## " not in md:

return ["No H2 headers found."]

return []

def save_article(md: str, slug: str | None = None):

if not slug:

slug = slugify("article-" + datetime.now().strftime("%YpercentmpercentdpercentHpercentMpercentS"))

path = os.path.be a part of(OUT_DIR, f"{slug}.md")

with open(path, "w", encoding="utf-8") as f:

f.write(md)

return path

def generate_blog(

title: str,

word_count: int = 1500,

viewers: str = "newbie bloggers and content material entrepreneurs",

geo: str = "world",

primary_kw: str | None = None,

secondary_kws: record[str] | None = None,

):

user_prompt = build_user_prompt(

title=title,

word_count=word_count,

viewers=viewers,

geo=geo,

primary_kw=primary_kw,

secondary_kws=secondary_kws or [],

)

md = call_llm(SYSTEM_PROMPT, user_prompt)

meta, fm_issues = validate_front_matter(md)

hdr_issues = ensure_headers(md)

points = fm_issues + hdr_issues

path = save_article(md, meta.get("slug"))

return {

"path": path,

"meta": meta,

"points": points

}

if __name__ == "__main__":

parser = argparse.ArgumentParser(description="Generate Search engine marketing weblog from title")

parser.add_argument("--title", required=True, assist="Weblog title")

parser.add_argument("--words", sort=int, default=1500, assist="Goal phrase depend")

args = parser.parse_args()

end result = generate_blog(

title=args.title,

word_count=args.phrases,

primary_kw=args.title.decrease(), # easy default key phrase

secondary_kws=[],

)

print("Saved:", end result["path"])

if end result["issues"]:

print("Validation notes:")

for i in end result["issues"]:

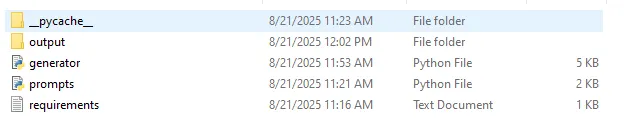

print("-", i)Simply to make sure you’re doing proper. Your mission folder ought to have the next recordsdata. Be aware that the output folder and the _pycache_ folder can be created explicitly.

Step 5 – Run It

You’re nearly carried out. Within the command line interface, run the next command to get the output. An output will routinely get saved within the output folder of your mission supply within the (.md) format file.

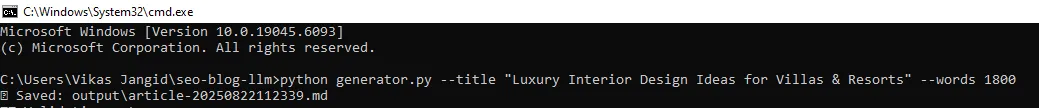

python generator.py --title "Luxurious Inside Design Concepts for Villas & Resorts" --words 1800And you’ll see one thing like this within the command line:

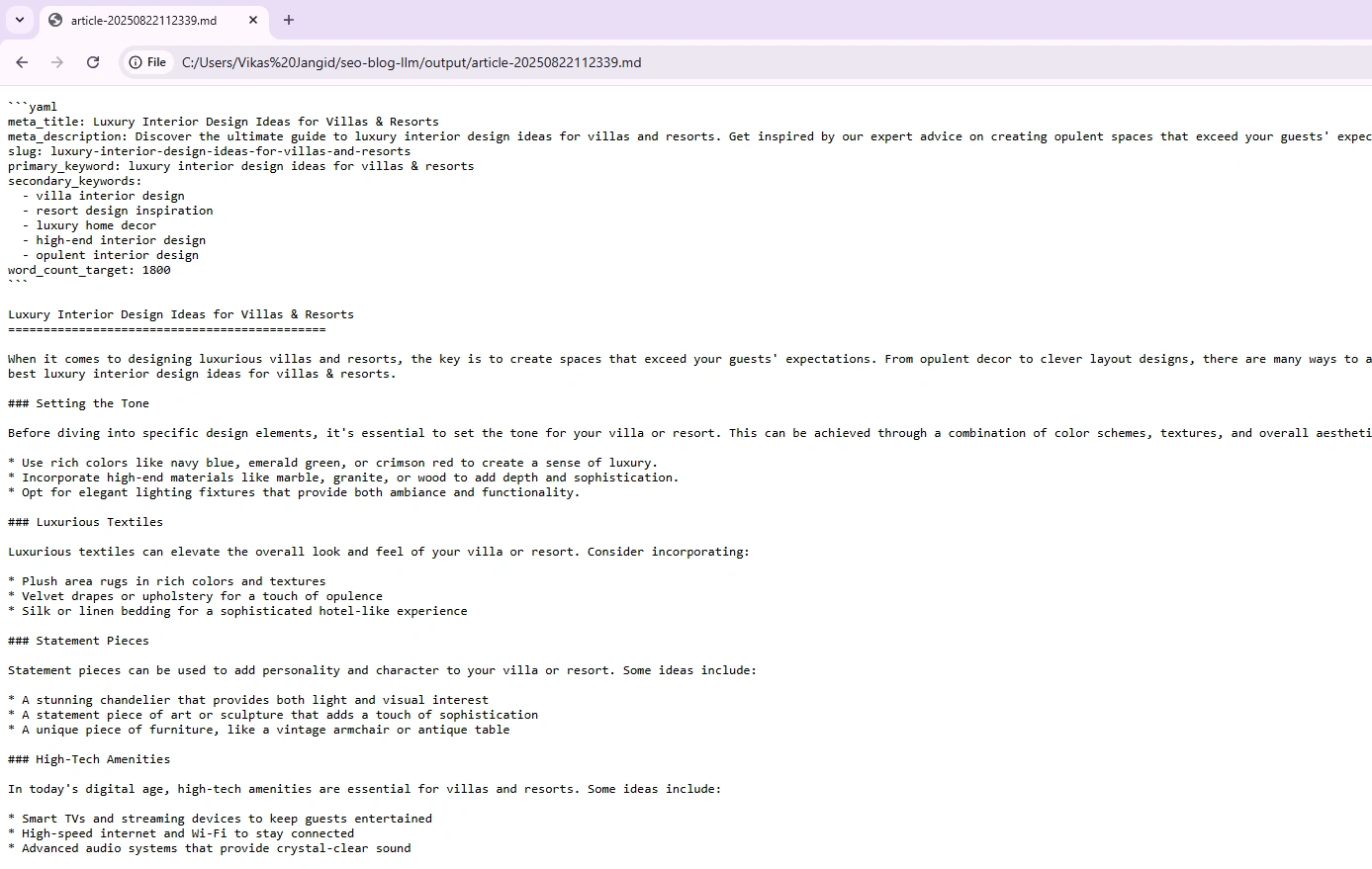

To open the generated output markdown (.md) file. Both use VS Code or drag-and-drop to any browser. Right here, I’ve used the Chrome browser to open the file, and the output seems acceptable:

Issues to bear in mind

Right here are some things to bear in mind whereas utilizing the above code:

- Operating the setup with solely 8 GB RAM led to gradual responses. For a smoother expertise, I like to recommend 12–16 GB RAM when working LLaMA 3 regionally.

- The mannequin LLama3:8B usually returned fewer than the requested phrases. The generated output is fewer than 800 phrases.

- Add passing parameters like

geo,tone, andaudiencewithin the run command to generate extra specified output.

Key Takeaway

You’ve simply constructed a customized LLM-powered utility by yourself machine. What we did was use the uncooked LLaMa 3 and formed its conduct with immediate engineering.

Right here’s a fast recap:

- Put in Ollama that permits you to run LLaMA 3 regionally.

- Pulled the LLaMA 3 8B mannequin so that you don’t depend on exterior APIs.

- Wrote immediate.py that defines find out how to instruct the mannequin.

- Wrote generator.py that acts as your mini app.

Ultimately, you have got discovered immediate engineering idea with its strategies and hands-on apply creating an LLM-powered utility.

Learn extra:

Steadily Requested Questions

A. LLMs can not generate output explicitly and subsequently require a immediate that guides them to grasp what process or info to provide.

A. Immediate engineering instructs LLM to behave logically and successfully earlier than producing the output. It means crafting particular and well-defined directions to information the LLM in producing the specified output.

A. The 4 pillars of immediate engineering are Simplicity (clear and simple), Specificity (concise and particular), Construction (logical format), and Sensitivity (honest and unbiased).

A. Sure, immediate engineering is a talent and in vogue. It requires thorough pondering in crafting efficient prompts that information LLMs in direction of desired outcomes.

A. Immediate engineers are expert professionals in understanding the enter (prompts) and excel in creating dependable and strong prompts, particularly for giant language fashions, to optimize their efficiency and guarantee they generate extremely correct and artistic outputs.

Login to proceed studying and luxuriate in expert-curated content material.