Overview

In this guide, you’ll:

- Gain a high-level understanding of vectors, embeddings, vector search, and vector databases, which will clarify the concepts we build upon.

- Learn how to use the Rockset console with OpenAI embeddings to perform vector-similarity searches, which form the backbone of our recommender engine.

- Build a dynamic web application using vanilla CSS, HTML, JavaScript, and Flask that integrates with the Rockset API and the OpenAI API.

- Find an end-to-end Colab notebook that you can run without any dependencies on your local operating system: Recsys_workshop.

Introduction

A real-time personalized recommender system can add huge value to an organization by enhancing the level of user engagement and ultimately increasing user satisfaction.

Building a recommendation system that deals efficiently with high-dimensional data to find accurate, relevant, and similar items in a large dataset requires effective and efficient vectorization, vector indexing, vector search, and retrieval, which in turn demands robust databases with optimal vector capabilities. For this post, we will use Rockset as the database and OpenAI embedding models to vectorize the dataset.

Vector and Embedding

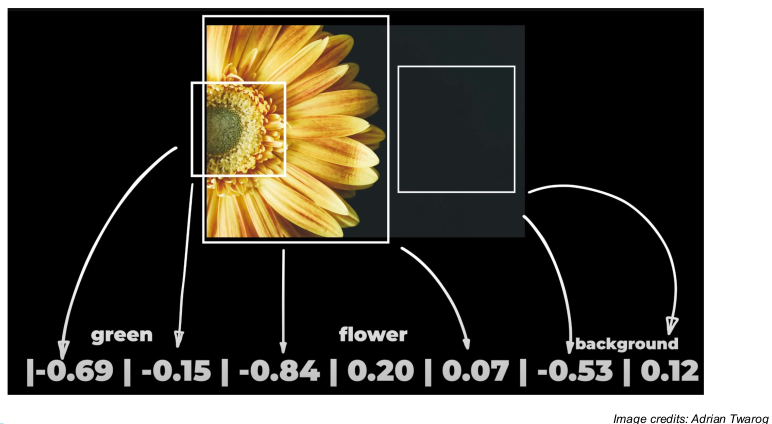

Vectors are structured and meaningful projections of data in a continuous space. They condense important attributes of an item into a numerical format while ensuring that similar data is grouped closely together in a multidimensional space. For example, in a vector space, the distance between the words “canine” and “pet” would be relatively small, reflecting their semantic similarity despite the difference in their spelling and length.
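To make the idea of distance concrete, here is a minimal sketch that compares toy vectors with cosine similarity. The three-dimensional vectors are made-up values chosen for illustration, not real embeddings; an actual embedding model produces vectors with far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional "embeddings", hand-picked for illustration only.
toy_vectors = {
    "canine": [0.9, 0.8, 0.1],
    "pet": [0.8, 0.9, 0.2],
    "invoice": [0.1, 0.0, 0.9],
}

canine_pet = cosine_similarity(toy_vectors["canine"], toy_vectors["pet"])
canine_invoice = cosine_similarity(toy_vectors["canine"], toy_vectors["invoice"])
print(f"canine vs pet:     {canine_pet:.3f}")
print(f"canine vs invoice: {canine_invoice:.3f}")
```

With these invented values, the related pair scores much closer to 1 than the unrelated pair, which is the property that similarity search relies on.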

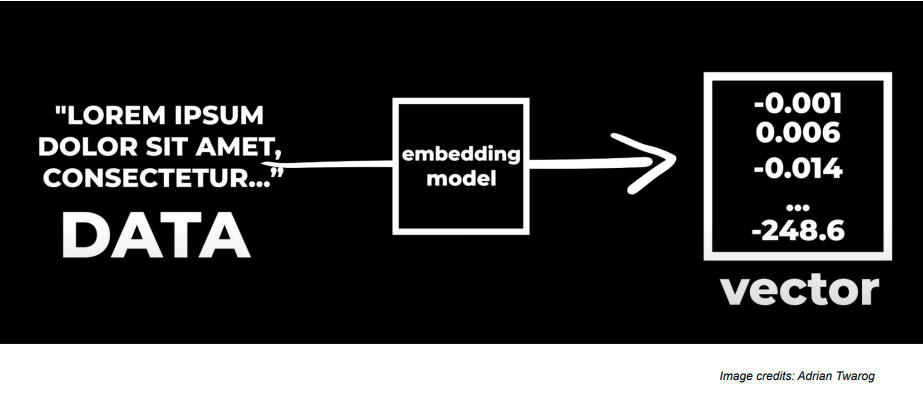

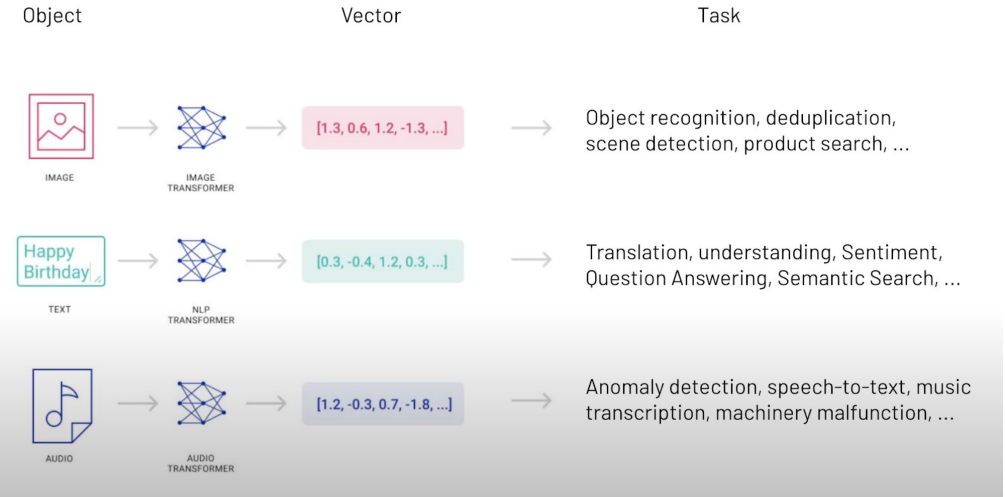

Embeddings are numerical representations of words, phrases, and other forms of data. Any kind of raw data can be processed through an AI-powered embedding model into embeddings, as shown in the accompanying picture. These embeddings can then be used to build various applications and implement a variety of use cases.

Several AI models and techniques can be used to create these embeddings, for instance Word2Vec, GloVe, and transformers like BERT and GPT. In this tutorial, we’ll be using OpenAI’s embeddings with the “text-embedding-ada-002” model.

Applications such as Google Lens, Netflix, Amazon, Google Speech-to-Text, and OpenAI Whisper use embeddings of images, text, and even audio and video clips created by an embedding model to generate equivalent vector representations. These vector embeddings preserve the semantic information, complex patterns, and other higher-dimensional relationships in the data very well.

Vector Search

Vector search is a technique that uses vectors to conduct searches and identify relevance among a pool of data. Unlike traditional keyword searches that rely on exact keyword matches, vector search captures semantic and contextual meaning as well.

Because of this, vector search can uncover relationships and similarities that traditional search methods might miss. It does so by converting data into vector representations, storing them in vector databases, and using algorithms to find the vectors most similar to a query vector.

Vector Database

Vector databases are specialized databases where data is stored in the form of vector embeddings. To cater to the complex nature of vectorized data, a specialized and optimized database is designed to handle the embeddings efficiently. To ensure that vector databases provide the most relevant and accurate results, they make use of vector search.

A production-ready vector database will solve many, many more “database” problems than “vector” problems. By no means is vector search, itself, an “easy” problem, but the mountain of traditional database problems that a vector database needs to solve certainly remains the “hard part.” Databases solve a host of very real and very well-studied problems, from atomicity and transactions, consistency, performance and query optimization, durability, backups, access control, multi-tenancy, scaling and sharding, and much more. Vector databases will require answers in all of these dimensions for any product, business, or enterprise. Read more on challenges related to Scaling Vector Search here.

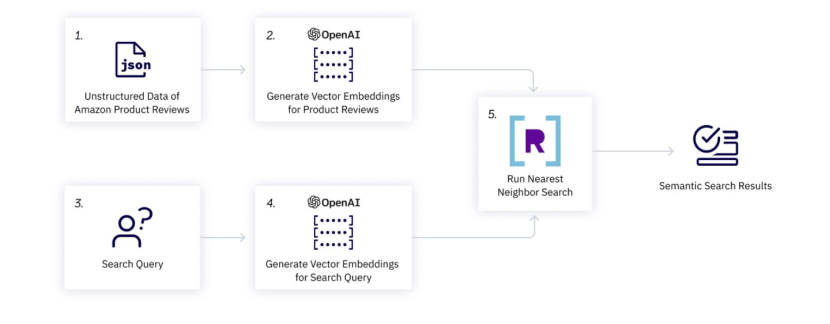

Overview of the Recommendation WebApp

The picture below shows the workflow of the application we’ll be building. We have unstructured data, i.e., game reviews in our case. We’ll generate vector embeddings for all of these reviews through an OpenAI model and store them in the database. Then we’ll use the same OpenAI model to generate a vector embedding for our search query and match it against the review embeddings using a similarity function such as nearest neighbor search, dot product, or approximate nearest neighbor search. Finally, we will have our top 10 recommendations ready to be displayed.

Steps to Build the Recommender System Using Rockset and OpenAI Embeddings

Let’s begin by signing up for Rockset and OpenAI, and then dive into all the steps involved within the Google Colab notebook to build our recommendation webapp:

Step 1: Sign Up on Rockset

Sign up and create an API key to use in the backend code. Save it in an environment variable with the following code:

    import os

    os.environ["ROCKSET_API_KEY"] = "XveaN8L9mUFgaOkffpv6tX6VSPHz####"
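Hard-coding a key in a notebook cell is convenient for a workshop, but it is easy to leak. As a hedged alternative, the sketch below reads a key from the environment and fails early with a clear message if it is missing. The helper name `require_env` is hypothetical and not part of the original notebook.

```python
import os


def require_env(name):
    """Return the value of an environment variable or raise a clear error."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


# Demo with a throwaway variable name so no real credential is involved.
os.environ["EXAMPLE_API_KEY"] = "placeholder-value"
print(require_env("EXAMPLE_API_KEY"))
```

In the backend, the same helper could be called with `"ROCKSET_API_KEY"` so that a missing key surfaces at startup rather than on the first query.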

Step 2: Create a New Collection and Upload Data

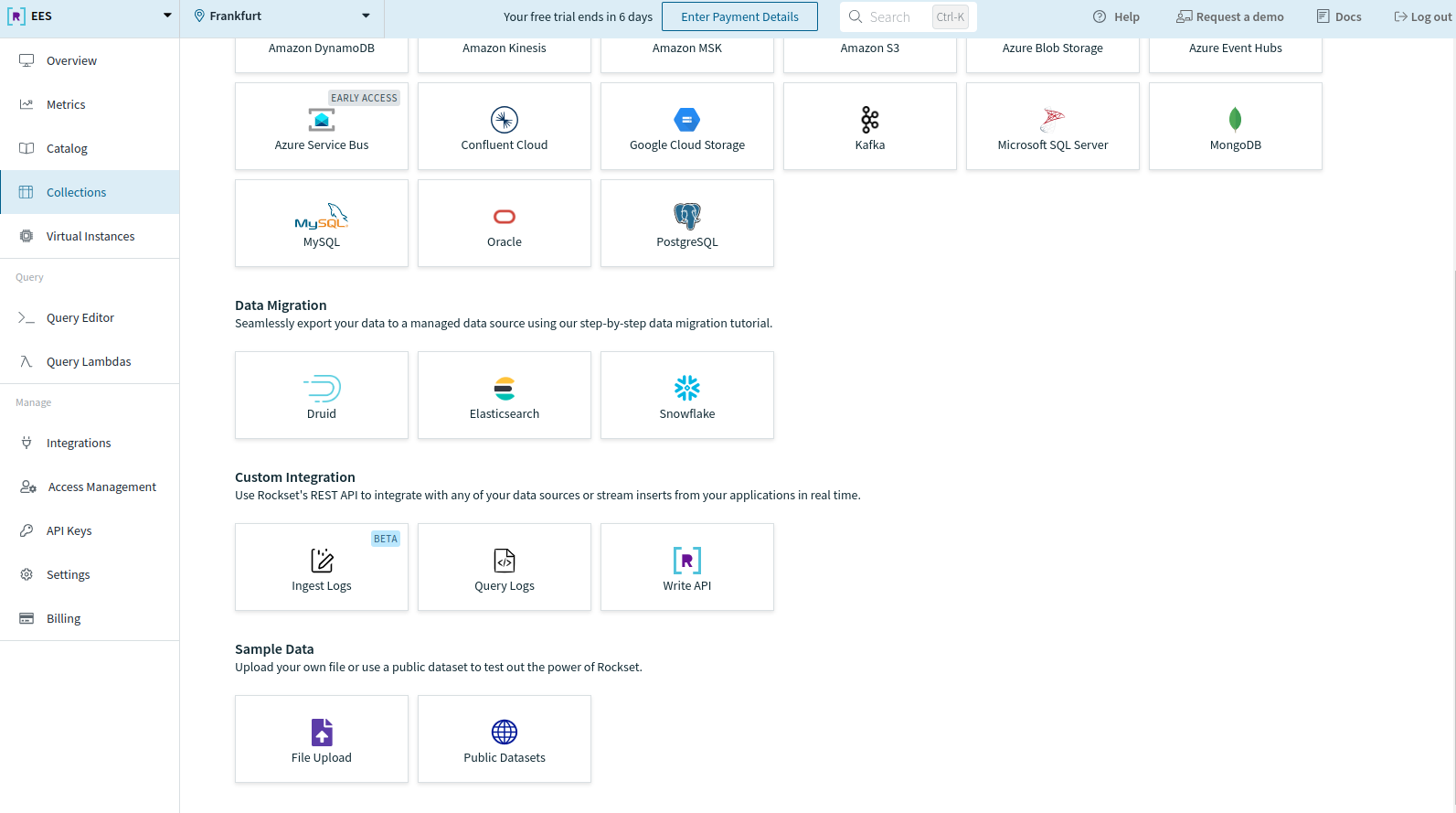

After creating an account, create a new collection from your Rockset console. Scroll to the bottom and choose File Upload under Sample Data to upload your data.

For this tutorial, we’ll be using Amazon product review data. The vectorized form of the data is available to download here. Download it to your local machine so it can be uploaded to your collection.

You’ll be directed to the following page. Click on Start.

You can upload the data in JSON, CSV, XML, Parquet, XLS, or PDF format.

Click on the Choose file button and navigate to the file you want to upload. This may take some time. After the file is uploaded successfully, you’ll be able to review it under Source Preview.

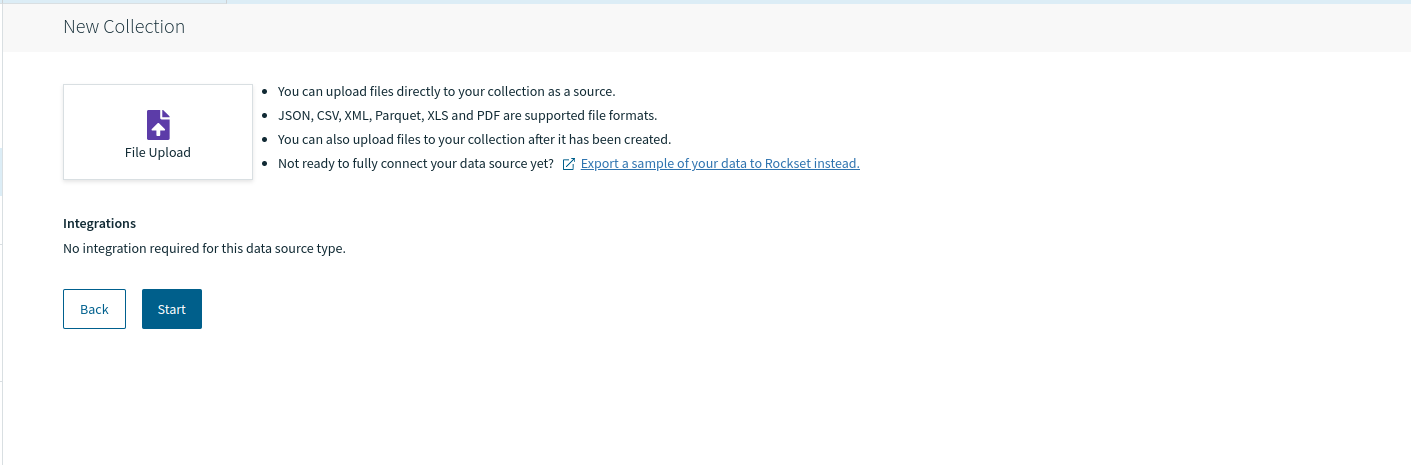

We’ll upload the sample_data.json file and then click on Next. You’ll be directed to the SQL transformation screen, where you can perform transformations or feature engineering as needed.

As we don’t want to apply any transformation now, we’ll move on to the next step by clicking Next.

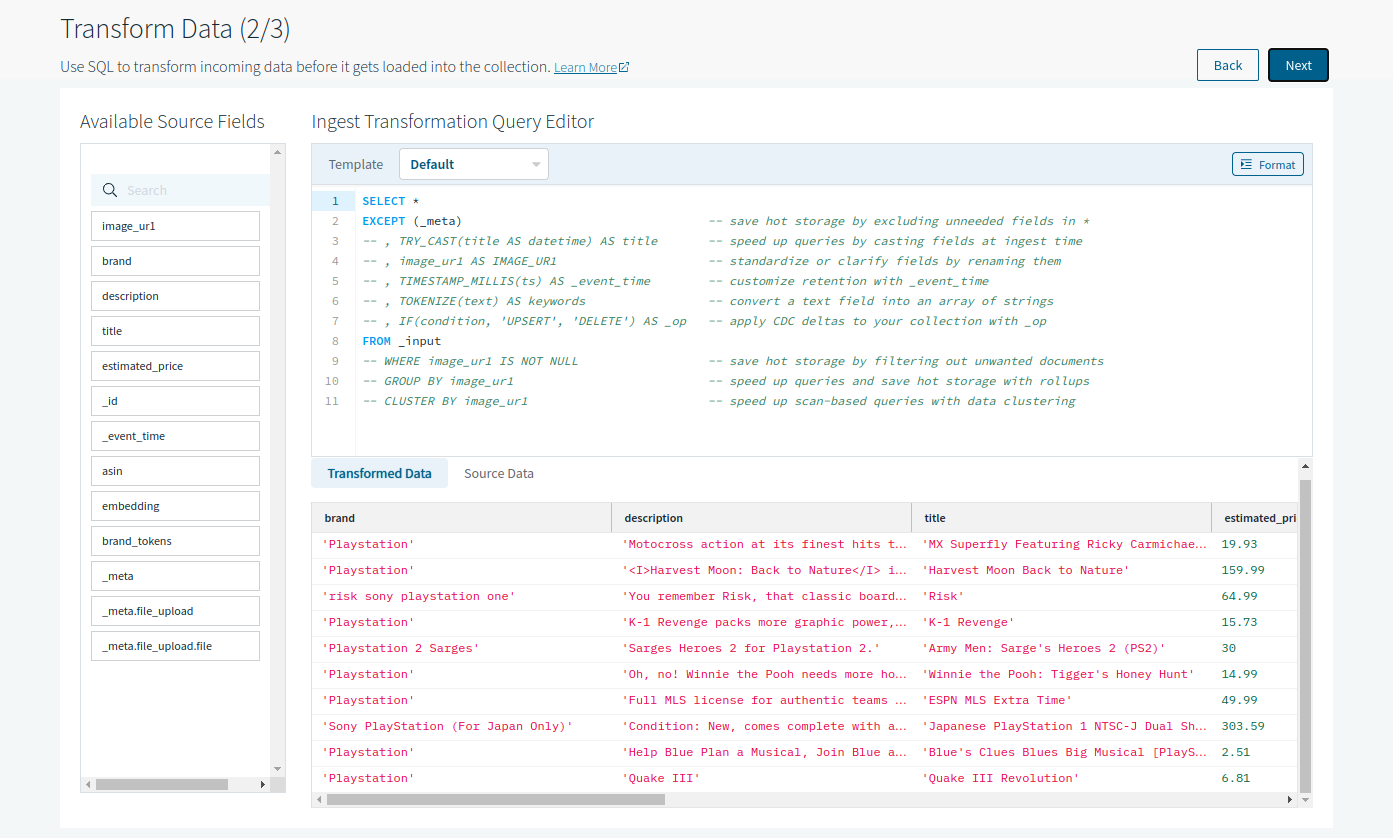

Now, the configuration screen will prompt you to choose your workspace (‘commons’ is selected by default) along with the Collection Name and several other collection settings.

We’ll name our collection “sample” and move forward with the default configuration by clicking Create.

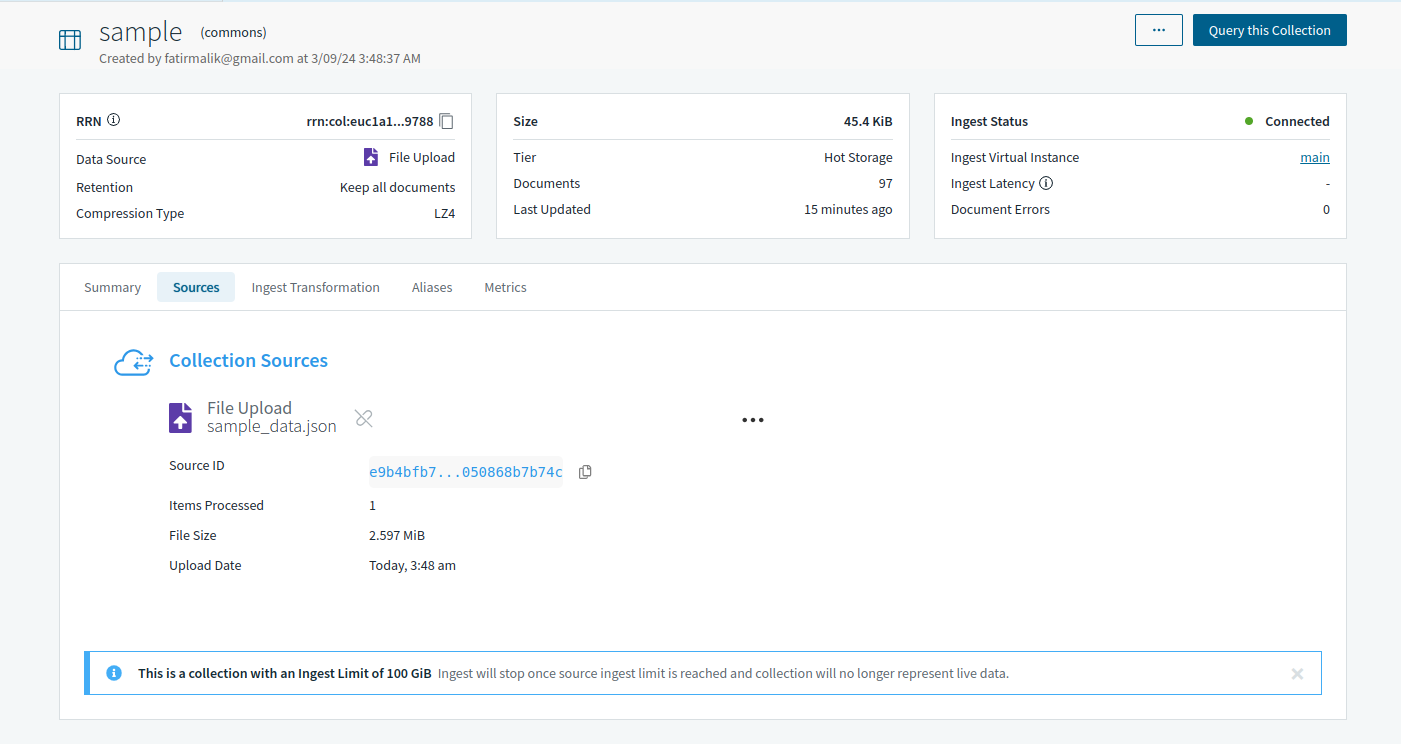

Finally, your collection will be created. However, it might take some time before the Ingest Status changes from Initializing to Connected.

Once the status is updated, you can query the collection with Rockset’s query tool via the Query this Collection button in the top-right corner of the picture below.

Step 3: Create OpenAI API Key

To convert data into embeddings, we’ll use an OpenAI embedding model. Sign up for OpenAI and then create an API key.

After signing up, go to API Keys and create a secret key. Don’t forget to copy and save your key. As with Rockset’s API key, save your OpenAI key in your environment so it can easily be used throughout the notebook:

    import os

    os.environ["OPENAI_API_KEY"] = "sk-####"

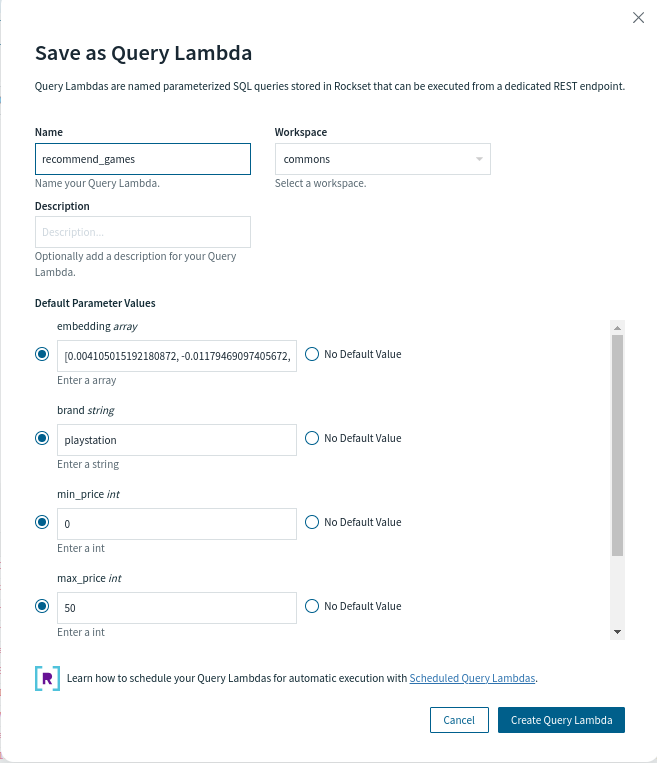

Step 4: Create a Query Lambda on Rockset

Rockset lets its users take full advantage of the flexibility and comfort of a managed database platform through Query Lambdas. These parameterized SQL queries can be stored in Rockset as a separate resource and then executed on the fly through dedicated REST endpoints.
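As a rough illustration of what executing a Query Lambda over REST involves, the sketch below only assembles the request (URL, headers, and JSON body) and does not send it. The URL layout, the `ApiKey` authorization scheme, the regional host, and the helper name `build_lambda_request` are assumptions made for illustration; check them against Rockset’s REST API reference before relying on them.

```python
import json


def build_lambda_request(host, workspace, query_lambda, tag, api_key, parameters):
    """Assemble the pieces of an HTTP POST that would execute a Query Lambda.

    The path layout and header format are assumptions, not confirmed by this guide.
    """
    url = f"{host}/v1/orgs/self/ws/{workspace}/lambdas/{query_lambda}/tags/{tag}"
    headers = {
        "Authorization": f"ApiKey {api_key}",
        "Content-Type": "application/json",
    }
    body = json.dumps({"parameters": parameters})
    return url, headers, body


url, headers, body = build_lambda_request(
    host="https://api.usw2a1.rockset.com",  # assumed regional host
    workspace="commons",
    query_lambda="recommend_games",  # the name this guide gives its Query Lambda
    tag="latest",
    api_key="YOUR_ROCKSET_API_KEY",  # placeholder, not a real key
    parameters=[{"name": "min_price", "type": "int", "value": "10"}],
)
print(url)
print(body)
```

The backend code later in this guide uses the Rockset Python client instead, which hides these request details behind a single method call.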

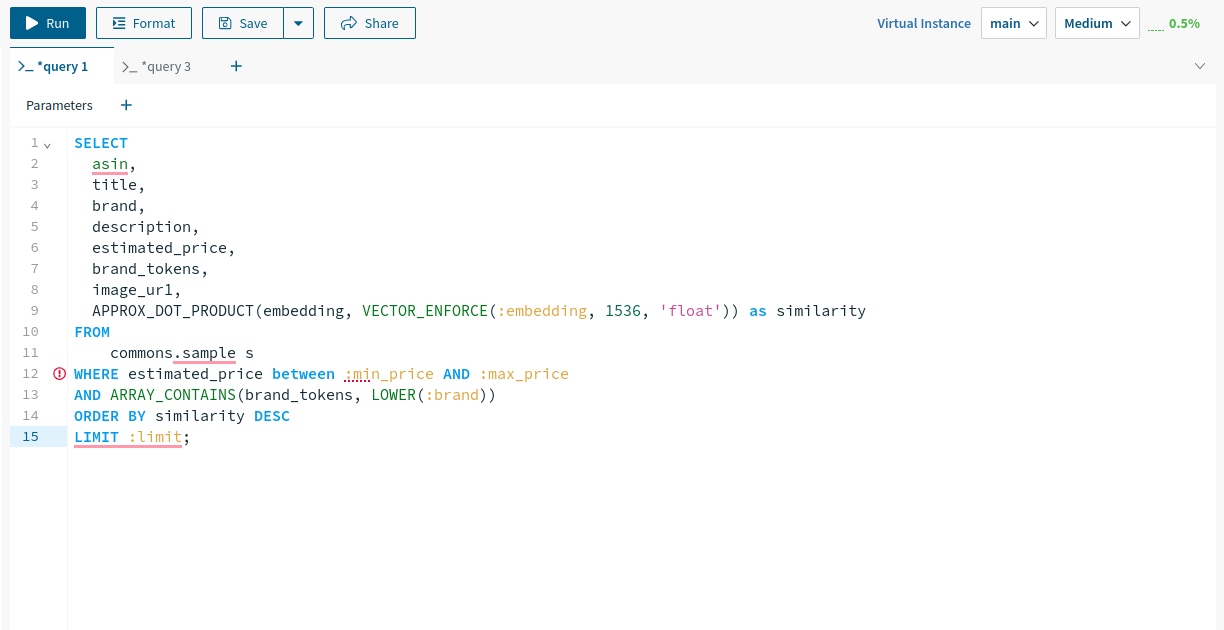

Let’s create one for our tutorial. We’ll be using the following Query Lambda with the parameters embedding, brand, min_price, max_price, and limit.

    SELECT
        asin,
        title,
        brand,
        description,
        estimated_price,
        brand_tokens,
        image_ur1,
        APPROX_DOT_PRODUCT(embedding, VECTOR_ENFORCE(:embedding, 1536, 'float')) AS similarity
    FROM
        commons.sample s
    WHERE estimated_price BETWEEN :min_price AND :max_price
        AND ARRAY_CONTAINS(brand_tokens, LOWER(:brand))
    ORDER BY similarity DESC
    LIMIT :limit;

This parameterized query does the following:

- Retrieves data from the “sample” collection in the “commons” workspace and selects specific columns: asin, title, brand, description, estimated_price, brand_tokens, and image_ur1.

- Computes the similarity between the provided embedding and the embedding stored in the database using the APPROX_DOT_PRODUCT function.

- Filters results so that estimated_price falls within the provided range and brand_tokens contains the specified brand. The results are then sorted by similarity in descending order.

- Finally, limits the number of returned rows according to the provided ‘limit’ parameter.
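To see the same logic outside SQL, here is a small in-memory sketch of the steps above: filter by price and brand token, score with a dot product, sort in descending order, and truncate. It is only an analogy. The rows and two-dimensional embeddings are invented, and an exact dot product stands in for APPROX_DOT_PRODUCT, which may rely on an approximate index in the real database.

```python
def dot(a, b):
    """Exact dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def recommend(rows, embedding, brand, min_price, max_price, limit):
    """Mimic the query: WHERE filters, similarity score, ORDER BY, LIMIT."""
    candidates = [
        row for row in rows
        if min_price <= row["estimated_price"] <= max_price
        and brand.lower() in row["brand_tokens"]
    ]
    scored = [
        {**row, "similarity": dot(row["embedding"], embedding)}
        for row in candidates
    ]
    scored.sort(key=lambda row: row["similarity"], reverse=True)
    return scored[:limit]


# Invented rows with tiny 2-dimensional embeddings, for illustration only.
rows = [
    {"title": "Game A", "estimated_price": 20, "brand_tokens": ["acme"], "embedding": [0.9, 0.1]},
    {"title": "Game B", "estimated_price": 35, "brand_tokens": ["acme", "games"], "embedding": [0.2, 0.8]},
    {"title": "Game C", "estimated_price": 25, "brand_tokens": ["other"], "embedding": [1.0, 0.0]},
    {"title": "Game D", "estimated_price": 90, "brand_tokens": ["acme"], "embedding": [1.0, 0.0]},
]

results = recommend(rows, embedding=[1.0, 0.0], brand="Acme", min_price=10, max_price=50, limit=2)
for row in results:
    print(row["title"], round(row["similarity"], 2))
```

Note that the filters run before scoring, so rows outside the price range or brand never compete on similarity, however close their embeddings are to the query.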

To build this Query Lambda, query the collection made in Step 2 by clicking on Query this Collection and pasting the parameterized query above into the query editor.

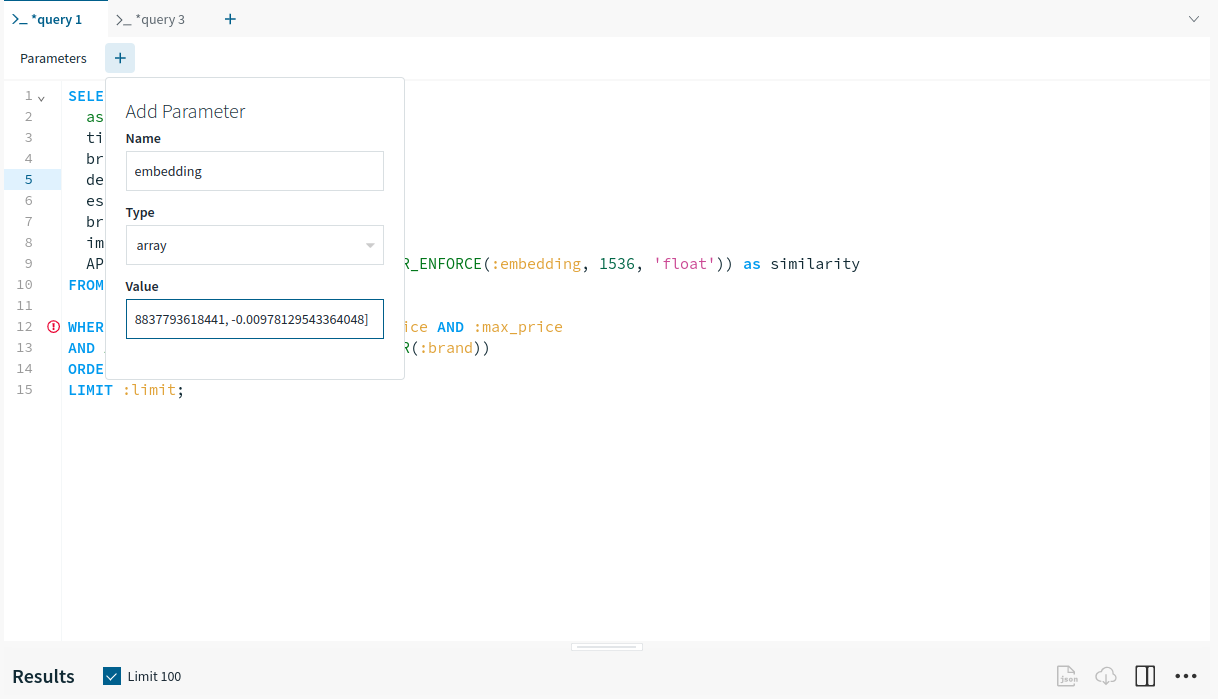

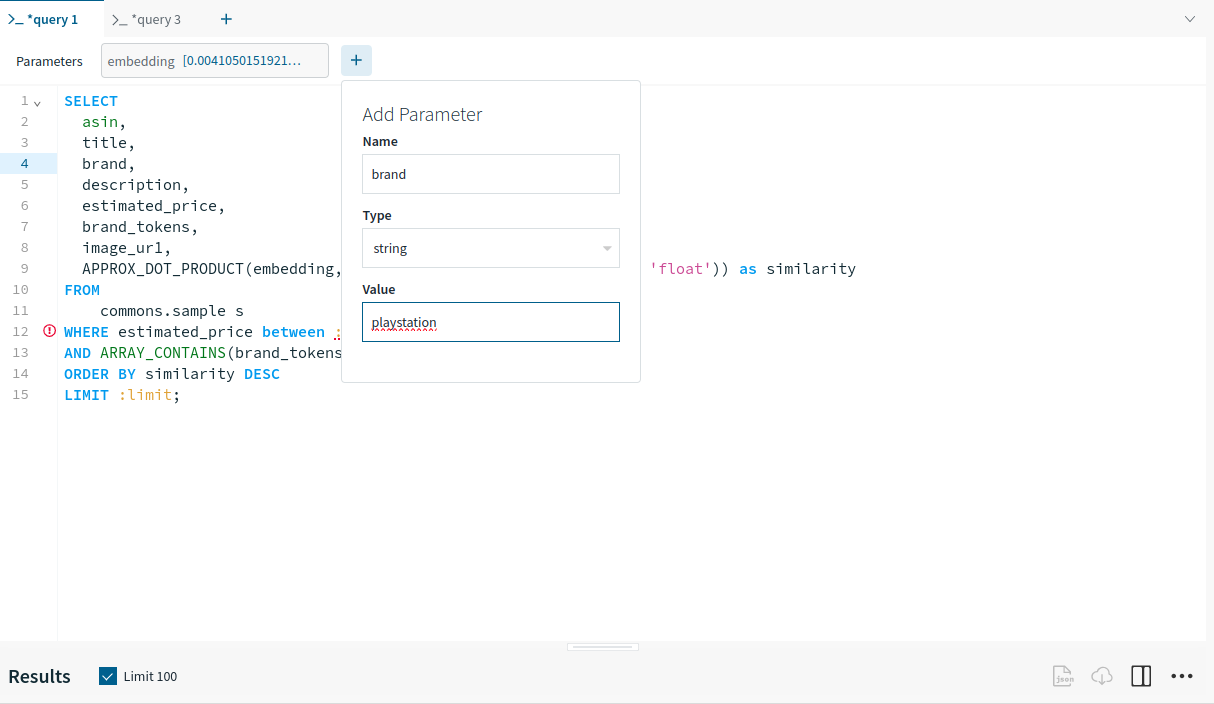

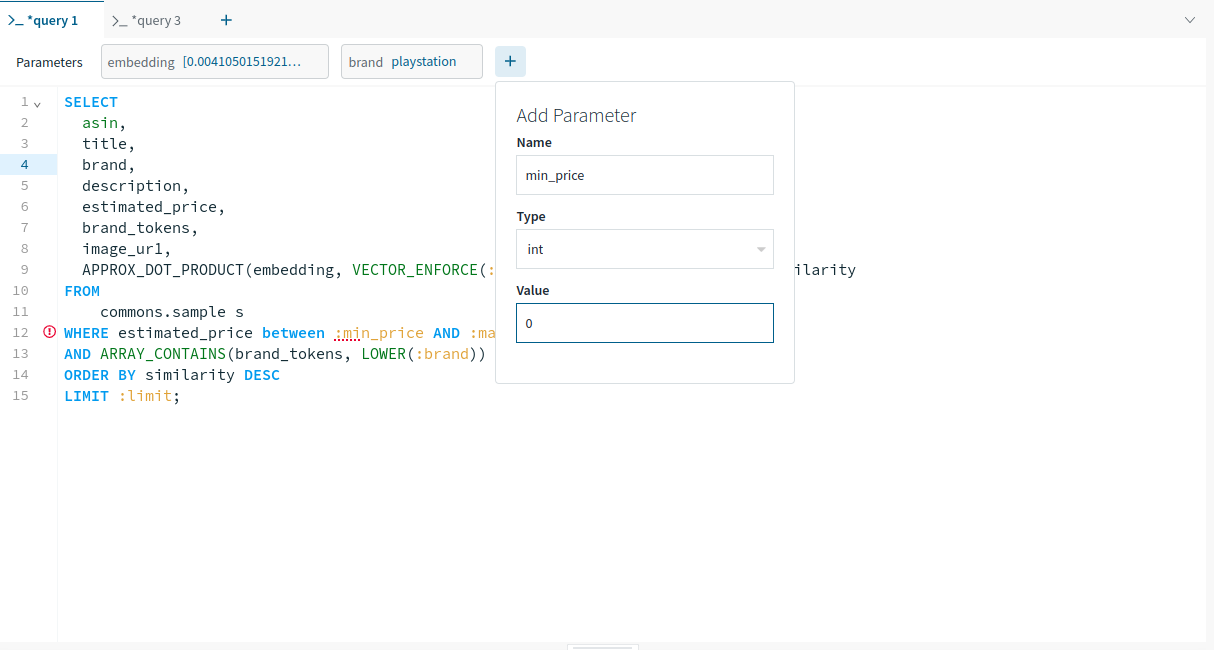

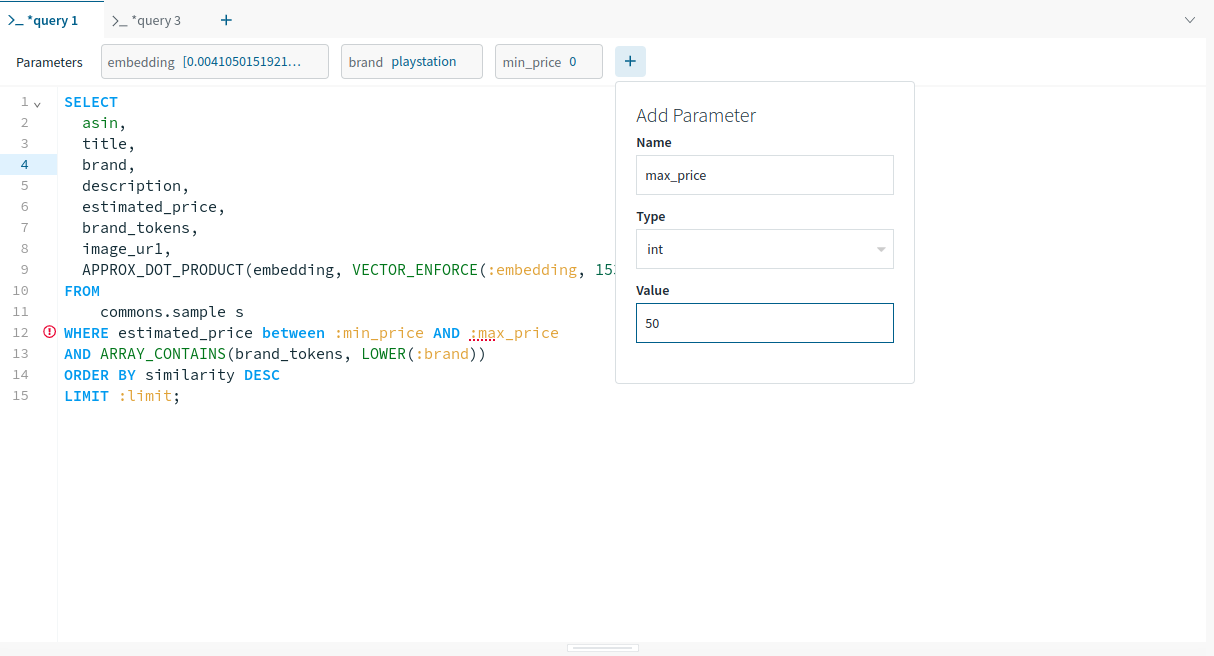

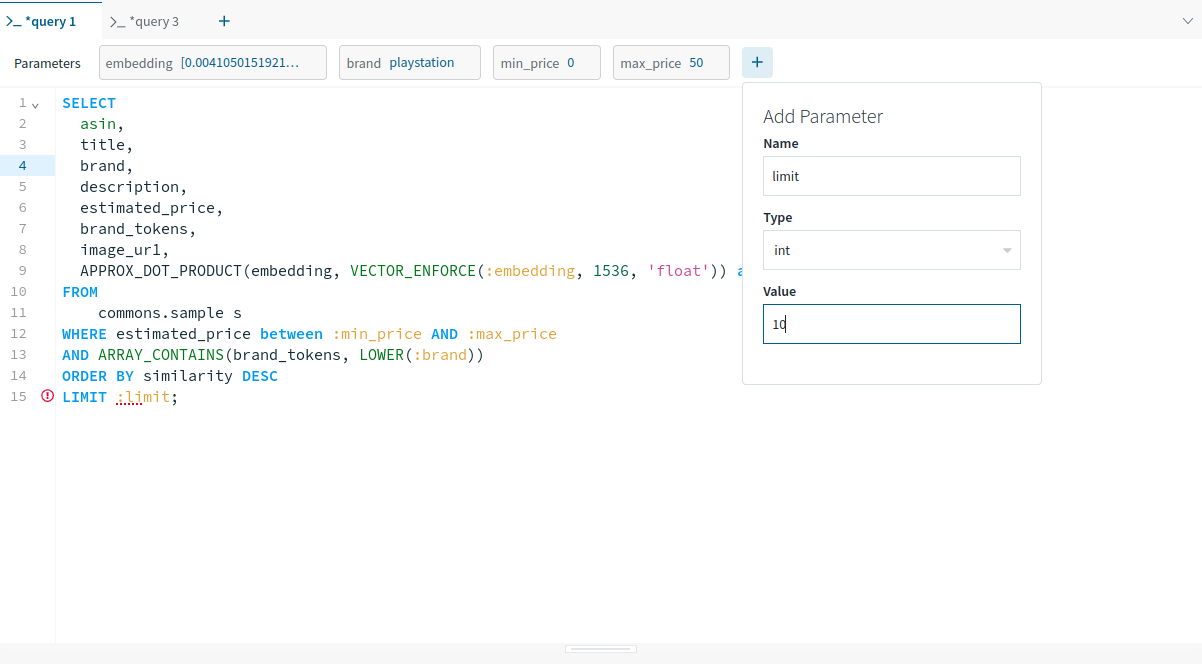

Next, add the parameters one by one so you can run the query before saving it as a Query Lambda.

You can use the default embedding value from here. It’s a vectorized embedding for ‘Star Wars’. For the remaining default values, consult the pictures below.

Note: Running the query with parameters before saving it as a Query Lambda is not mandatory. However, it’s good practice to make sure the query executes without errors before it is used in production.

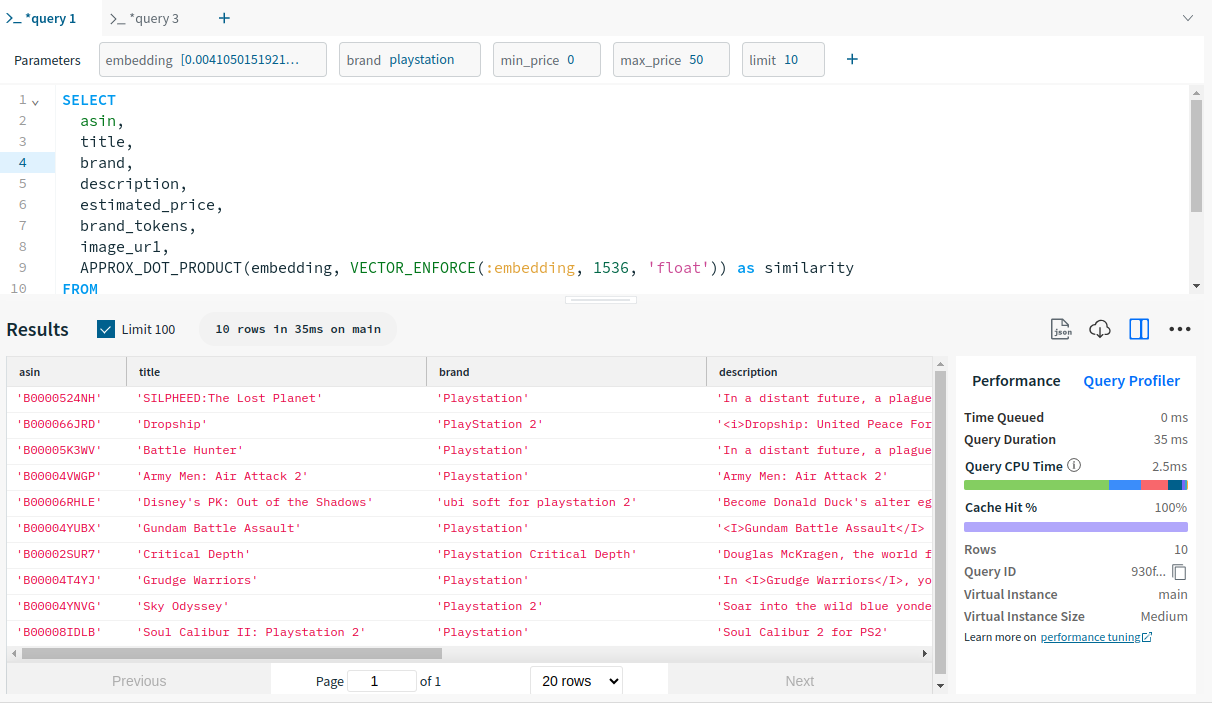

After the default parameters are set up, the query executes successfully.

Let’s save this Query Lambda now. Click on Save in the query editor and name your Query Lambda, which is “recommend_games” in our case.

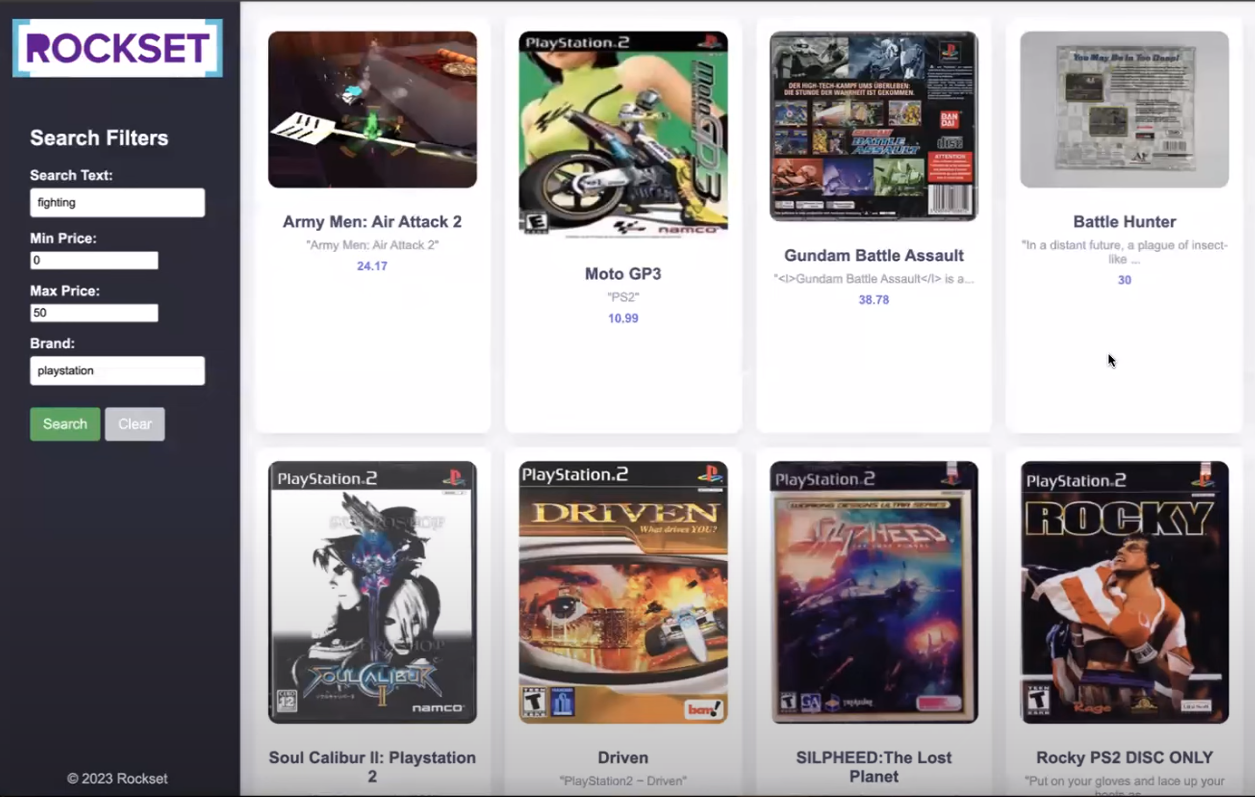

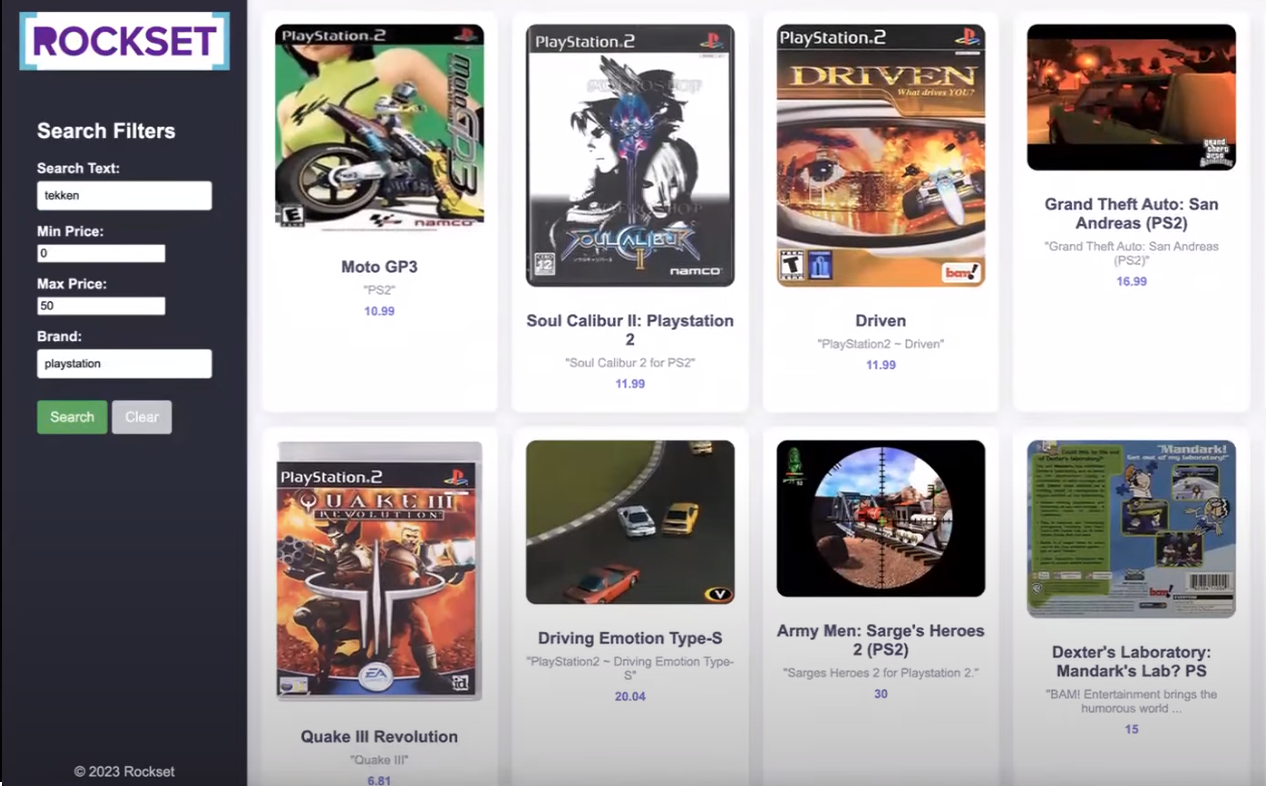

Frontend Overview

The final step in creating the web application involves implementing a frontend design using vanilla HTML, CSS, and JavaScript, along with a backend implementation using Flask, a lightweight, Pythonic web framework.

The frontend page looks as shown below:

- HTML Structure:
  - The basic structure of the webpage includes a sidebar, header, and product grid container.

- Sidebar:
  - The sidebar contains search filters such as brands, min and max price, etc., and buttons for user interaction.

- Product Grid Container:
  - The container populates product cards dynamically using JavaScript to display product information, i.e., image, title, description, and price.

- JavaScript Functionality:
  - It handles interactions such as toggling full descriptions, populating the recommendations, and clearing search form inputs.

- CSS Styling:
  - Implemented for responsive design to ensure optimal viewing on various devices and to improve aesthetics.

Check out the full code behind this frontend here.

Backend Overview

Flask makes creating web applications in Python easier by rendering the HTML and CSS files via single-line commands. The backend code for the rest of the tutorial has already been completed for you.

Initially, the GET method will be called and the HTML file will be rendered. As there will be no recommendations at this point, the basic structure of the page will be displayed in the browser. After this is done, we can fill in the form and submit it, thereby using the POST method to get some recommendations.

Let’s dive into the main components of the code as we did for the frontend:

- Flask App Setup:
  - A Flask application named app is defined along with a route for both GET and POST requests at the root URL (“/”).

- Index function:

    # Setup the snippets in this section rely on (assumed here; see the full code for the exact version)
    import json
    import os
    from datetime import datetime

    from flask import Flask, render_template, request
    from openai import OpenAI
    from rockset import RocksetClient, Regions

    app = Flask(__name__)
    client = OpenAI()  # reads OPENAI_API_KEY from the environment


    @app.route('/', methods=['GET', 'POST'])
    def index():
        if request.method == 'POST':
            # Extract data from form fields
            inputs = get_inputs()

            search_query_embedding = get_openai_embedding(inputs, client)
            rockset_key = os.environ.get('ROCKSET_API_KEY')
            region = Regions.usw2a1
            records_list = get_rs_results(inputs, region, rockset_key, search_query_embedding)

            folder_path = "static"
            for record in records_list:
                # Extract the identifier from the URL
                identifier = record["image_url"].split('/')[-1].split('_')[0]
                file_found = None
                for file in os.listdir(folder_path):
                    if file.startswith(identifier):
                        file_found = file
                        break
                if file_found:
                    # Overwrite record["image_url"] with the name of the local file
                    record["image_url"] = file_found
                    record["description"] = json.dumps(record["description"])
                    # print(f"Matched file: {file_found}")
                else:
                    print("No matching file found.")

            # Render index.html with results
            return render_template('index.html', records_list=records_list, request=request)

        # If method is GET, just render the form
        return render_template('index.html', request=request)
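The image lookup in index() hinges on one string operation: take the last path segment of the image URL, keep everything before the first underscore, and then look for a local file whose name starts with that identifier. The sketch below isolates that step. The URL and file names are invented, and `find_local_image` is a hypothetical helper that takes a list of file names instead of reading the static folder.

```python
def extract_identifier(image_url):
    """Last path segment of the URL, up to the first underscore."""
    return image_url.split("/")[-1].split("_")[0]


def find_local_image(image_url, filenames):
    """Return the first file name that starts with the URL's identifier, or None."""
    identifier = extract_identifier(image_url)
    for name in filenames:
        if name.startswith(identifier):
            return name
    return None


# Invented URL and file names, for illustration only.
url = "https://example.com/images/I/51AbCdEfGh_SX300.jpg"
files = ["41ZzYyXxWw.jpg", "51AbCdEfGh.jpg"]

print(extract_identifier(url))
print(find_local_image(url, files))
```

One thing to keep in mind with this approach: if the last URL segment contains no underscore, the identifier includes the file extension, so the prefix match only succeeds when the local file has exactly the same name.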

- Data Processing Functions:

  - get_inputs(): Extracts form data from the request.

    def get_inputs():
        # Read the search form fields submitted with the POST request
        search_query = request.form.get('search_query')
        min_price = request.form.get('min_price')
        max_price = request.form.get('max_price')
        brand = request.form.get('brand')
        # limit = request.form.get('limit')

        return {
            "search_query": search_query,
            "min_price": min_price,
            "max_price": max_price,
            "brand": brand,
            # "limit": limit
        }

  - get_openai_embedding(): Uses OpenAI to get embeddings for search queries.

    def get_openai_embedding(inputs, client):
        # openai.organization = org
        # openai.api_key = api_key

        openai_start = datetime.now()
        response = client.embeddings.create(
            input=inputs["search_query"],
            model="text-embedding-ada-002"
        )
        # The API returns one embedding per input; we sent a single query string
        search_query_embedding = response.data[0].embedding
        openai_end = datetime.now()
        elapsed_time = openai_end - openai_start  # kept for optional latency logging

        return search_query_embedding
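Because get_openai_embedding() only needs an object that exposes an embeddings.create(...) call and returns something with a data[0].embedding attribute, the call shape can be exercised offline with a stand-in client. The sketch below is an illustration under that assumption: `FakeEmbeddings`, `embed_query`, and the fixed three-number vector are hypothetical and exist only so the example runs without an API key or network access.

```python
from types import SimpleNamespace


class FakeEmbeddings:
    """Stand-in for the embeddings endpoint; always returns a fixed vector."""

    def create(self, input, model):
        # Remember the arguments so the call can be inspected afterwards.
        self.last_call = {"input": input, "model": model}
        return SimpleNamespace(data=[SimpleNamespace(embedding=[0.1, 0.2, 0.3])])


def embed_query(inputs, client):
    """Same call shape as the function above, without the timing code."""
    response = client.embeddings.create(
        input=inputs["search_query"],
        model="text-embedding-ada-002",
    )
    return response.data[0].embedding


fake_client = SimpleNamespace(embeddings=FakeEmbeddings())
vector = embed_query({"search_query": "space adventure game"}, fake_client)
print(vector)
```

Passing the client in as an argument, as the backend does, is what makes this kind of substitution possible.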

  - get_rs_results(): Uses the Query Lambda created earlier in Rockset and returns recommendations based on user inputs and embeddings.

    def get_rs_results(inputs, region, rockset_key, search_query_embedding):
        print("\nRunning Rockset Queries...")

        # Create an instance of the Rockset client
        rs = RocksetClient(api_key=rockset_key, host=region)

        # Execute the Query Lambda by tag
        rockset_start = datetime.now()
        api_response = rs.QueryLambdas.execute_query_lambda_by_tag(
            workspace="commons",
            query_lambda="recommend_games",
            tag="latest",
            parameters=[
                {
                    "name": "embedding",
                    "type": "array",
                    "value": str(search_query_embedding)
                },
                {
                    "name": "min_price",
                    "type": "int",
                    "value": inputs["min_price"]
                },
                {
                    "name": "max_price",
                    "type": "int",
                    "value": inputs["max_price"]
                },
                {
                    "name": "brand",
                    "type": "string",
                    "value": inputs["brand"]
                }
                # {
                #     "name": "limit",
                #     "type": "int",
                #     "value": inputs["limit"]
                # }
            ]
        )
        rockset_end = datetime.now()
        elapsed_time = rockset_end - rockset_start

        # Keep only the fields the template needs; the collection's image column is named image_ur1
        records_list = []
        for record in api_response["results"]:
            record_data = {
                "title": record['title'],
                "image_url": record['image_ur1'],
                "brand": record['brand'],
                "estimated_price": record['estimated_price'],
                "description": record['description']
            }
            records_list.append(record_data)

        return records_list

Overall, the Flask backend processes user input and interacts with external services (OpenAI and Rockset) via APIs to provide dynamic content to the frontend. It extracts form data from the frontend, generates OpenAI embeddings for text queries, and uses the Query Lambda in Rockset to find recommendations.

Now you’re ready to run the Flask server and access it through your web browser. Once the application is up and running, add some parameters and fetch some recommendations. The results will be displayed in an HTML template as shown below.

Note: The tutorial’s entire code is available on GitHub. For a quick-start online implementation, an end-to-end runnable Colab notebook is also configured.

The methodology outlined in this tutorial can serve as a foundation for various other applications beyond recommendation systems. By leveraging the same set of concepts and using embedding models and a vector database, you are now equipped to build applications such as semantic search engines, customer support chatbots, and real-time data analytics dashboards.

Stay tuned for more tutorials!

Cheers!!!